Sound of the Future: The Tools You Need For Immersive Audio Work

If you’ve been reading this site lately, you’ve probably seen some of our recent posts about game audio and immersive audio. If you’ve got a love for sound and new technology, by now you probably want to know how you can get started actually working in this field for yourself. Great—we’ve got you covered!

Below is an overview of some of the top toolsets out there, so you can start exploring the wonderful world of spatialized audio in VR, AR and 360 film. We’ll compare and contrast their features and give you our bottom-line verdict on how effective each one is.

If you need a refresher on the terms mentioned here, check out our recent article, where we bring you up to speed on all things immersive media. If you’d like to go even further and really get your hands dirty putting spatialized sounds into a VR game in the Unity game engine, consider attending our upcoming 4 Day Game Audio Workshop Intensive at San Francisco State University in June.

Facebook Audio 360

We’ll start with the 360 film and video world. As you may know, Facebook purchased Oculus, maker of the Rift virtual reality headset, which has given a huge boost to their presence in the immersive media world.

What you might not be aware of is that, besides buying Oculus, Facebook also purchased a company that was producing spatial audio plugins for game engines, dropped its game engine support in favor of video support, and renamed the tool “Facebook 360 Spatial Workstation”. You can download it for free, and the plugins work in Pro Tools, Reaper and Nuendo, or basically any DAW that supports VST or AAX plugins.

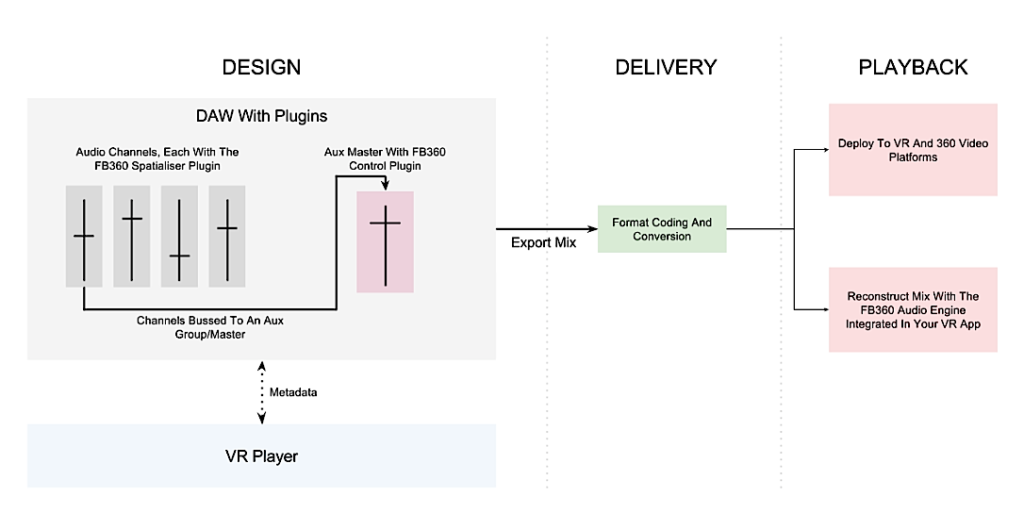

The basic output of the toolset is a specially formatted ambisonic audio file, a “.TBE” file. This file is later joined with the video, often inside a game engine, even when the finished project is a non-game application that simply plays videos. The toolset includes three main plugins:

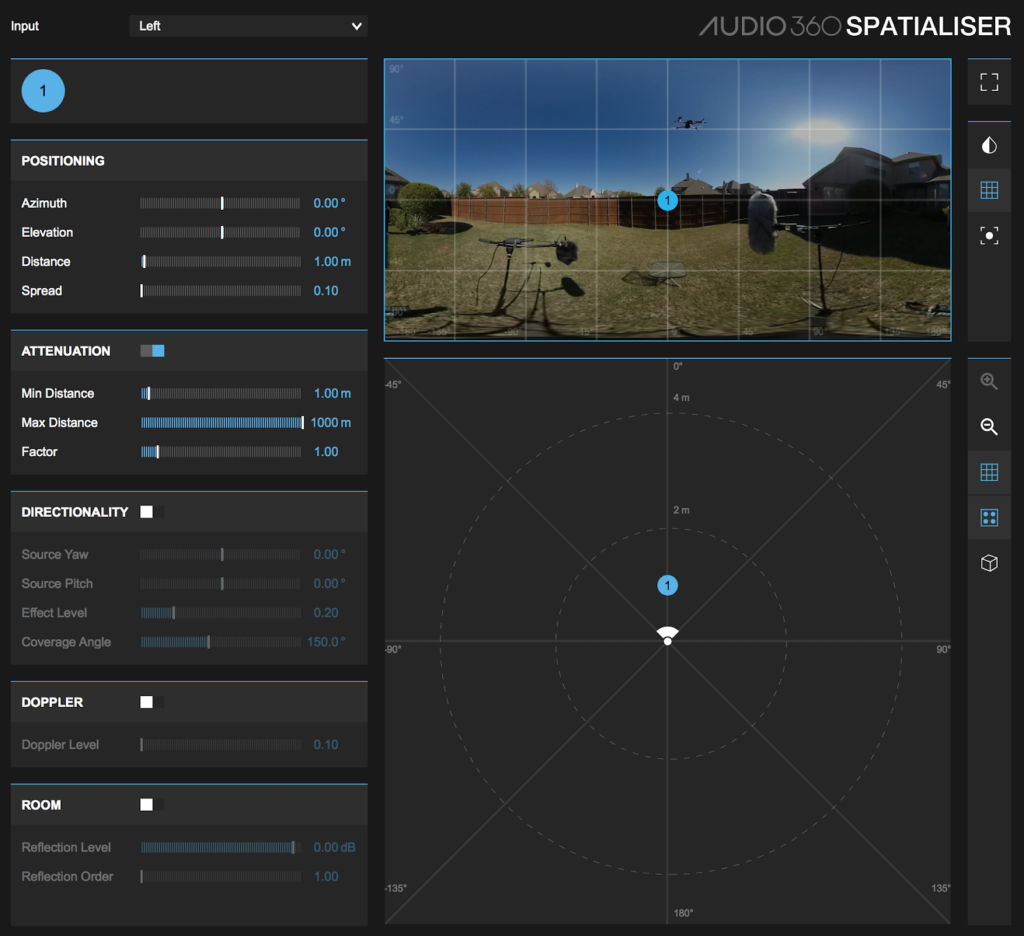

Audio 360 Spatializer

This plugin runs on each DAW channel that carries a sound you want to place in space. It can display the 360 video you are working with. You can then make audio objects follow elements in the video by automating parameters such as azimuth, elevation, distance, Doppler frequency/phase shift, and room reflection level.
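The Python sketch below makes those automation parameters a little more concrete. It is not the plugin's own code. It shows how an azimuth/elevation/distance triple maps to a 3D position, and how a changing distance produces a Doppler pitch shift. The axis convention and the 343 m/s speed of sound are assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 degrees C (assumed)

def spherical_to_cartesian(azimuth_deg, elevation_deg, distance_m):
    """Convert azimuth/elevation/distance to x (front), y (left), z (up).

    Assumed convention: azimuth 0 is straight ahead and increases toward the
    listener's left; elevation 0 is ear level.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance_m * math.cos(el) * math.cos(az)
    y = distance_m * math.cos(el) * math.sin(az)
    z = distance_m * math.sin(el)
    return x, y, z

def doppler_ratio(distance_before_m, distance_after_m, dt_s, c=SPEED_OF_SOUND):
    """Pitch ratio heard by a stationary listener from a moving source.

    Radial speed is estimated from the change in distance between two
    automation points: negative means approaching (higher pitch),
    positive means receding (lower pitch).
    """
    radial_speed = (distance_after_m - distance_before_m) / dt_s
    return c / (c + radial_speed)
```

Take a source that closes 34.3 m of distance in one second. `doppler_ratio(50.0, 15.7, 1.0)` returns about 1.11, so the source sounds roughly 11% higher in pitch.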

Audio 360 Control

This plugin is meant to run on an auxiliary bus or the master output. It coordinates the data from each Spatializer instance and adds realistic room reflections. Each channel can then be heard as a spatialized object in a physical space.

The Control plugin is effectively the master output for all of the spatialized tracks. It gives you a lot of control over both the spatial quality and the video playback settings on your device.

Audio 360 Converter

This plugin converts the final output of the Control plugin so it can be delivered as a .TBE file or in the AmbiX or Furse-Malham (“FuMa”) ambisonic formats.

It can also convert existing ambisonic audio files in the other direction, so you can manipulate them with the Audio 360 toolset.
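The Audio 360 Converter handles this internally. At first order, the difference between FuMa and AmbiX comes down to two things: channel order, and the level of the omnidirectional W channel. FuMa orders channels W, X, Y, Z and stores W 3 dB down. AmbiX uses ACN order (W, Y, Z, X) with SN3D normalization. The minimal Python sketch below covers first order only, since higher orders need extra per-channel gain factors.

```python
import math

def fuma_to_ambix_first_order(w, x, y, z):
    """Convert one first-order FuMa frame (W, X, Y, Z) to AmbiX (W, Y, Z, X).

    FuMa scales W by 1/sqrt(2); SN3D does not, so W is boosted by sqrt(2).
    At first order, X, Y and Z have the same gain in both conventions.
    """
    return (w * math.sqrt(2.0), y, z, x)

def ambix_to_fuma_first_order(w, y, z, x):
    """Inverse conversion: AmbiX (ACN/SN3D) frame back to FuMa order and scaling."""
    return (w / math.sqrt(2.0), x, y, z)
```
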

By default, a .TBE output file contains 8 or 9 channels of head-tracked audio and 2 channels of head-locked audio.
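The 9-channel figure matches full second-order ambisonics: an order-N stream has (N + 1)² channels. The Python check below covers that arithmetic only. The 8-channel .TBE layout is a format of its own and is not modelled here.

```python
def ambisonic_channels(order: int) -> int:
    """Channels in a full-sphere ambisonic stream of the given order: (N + 1) ** 2."""
    return (order + 1) ** 2

HEAD_LOCKED_CHANNELS = 2  # stereo that stays fixed relative to the listener's head

def total_channels(order: int) -> int:
    """Head-tracked ambisonic channels plus the head-locked stereo pair."""
    return ambisonic_channels(order) + HEAD_LOCKED_CHANNELS

for order in (1, 2, 3):
    print(f"order {order}: {ambisonic_channels(order)} tracked + "
          f"{HEAD_LOCKED_CHANNELS} locked = {total_channels(order)} channels")
```

This prints 6, 11 and 18 total channels for first, second and third order respectively.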

There’s also a useful metering plugin that measures both LUFS and dBFS. Check it out at this link: https://facebook360.fb.com/spatial-workstation/
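LUFS metering follows ITU-R BS.1770, which adds K-weighting and gating and is too involved for a short example. The dBFS side is simple, though. This minimal Python sketch assumes floating-point samples where ±1.0 is full scale.

```python
import math

def peak_dbfs(samples):
    """Peak level in dBFS; 0.0 means the loudest sample hits full scale."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return float("-inf")  # digital silence
    return 20.0 * math.log10(peak)

def rms_dbfs(samples):
    """RMS level in dBFS (a full-scale square wave reads 0.0 here)."""
    samples = list(samples)
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")
    return 10.0 * math.log10(mean_square)  # same as 20 * log10(rms)
```
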

Oculus Native Spatializer (ONSP)

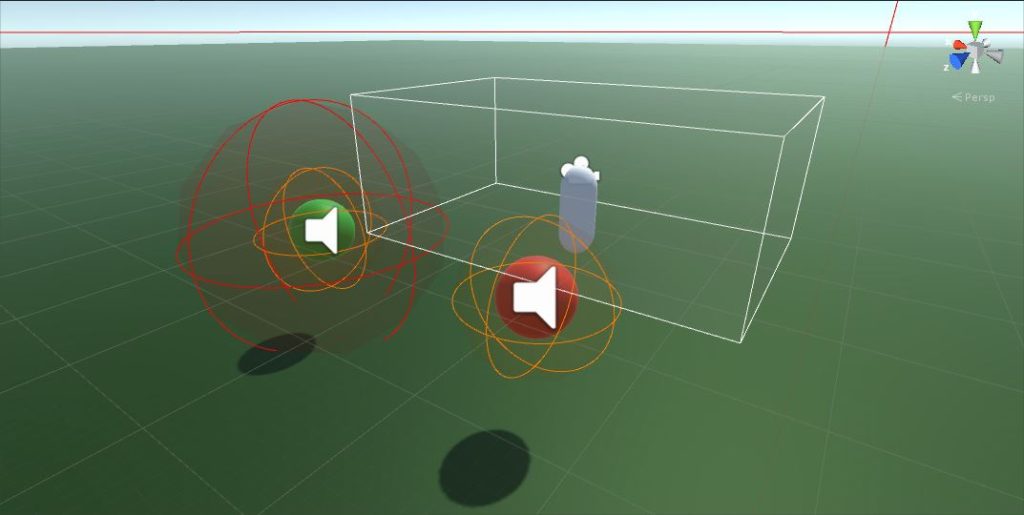

Now we get to game-oriented VR audio. Oculus was the first to create a convincing audio spatializer for Unity. A basic version of its spatializer has shipped as standard with Unity since version 5.4, including Unity 2017 through 2019. It has the following features:

- Compatible with all Oculus devices, including Oculus Go, Quest, Rift, Rift S and Gear VR

- Standard HRTF-based spatialization

- AmbiX ambisonic playback support

- Improved near-field perspective

- Basic room reverb

- Spherical propagation for audio sources

- No Web-based VR support
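“Spherical propagation” means a source radiates equally in all directions. Its level therefore falls off with distance according to the inverse-distance (1/r) law. The Python sketch below shows only that physics. ONSP's actual attenuation curve and near-field handling are its own, and the `min_distance_m` clamp is an assumption of this sketch.

```python
import math

def inverse_distance_gain(distance_m, min_distance_m=1.0):
    """Linear gain for a point source radiating spherically (1/r law).

    Inside min_distance_m the gain is held at 1.0 so a sound does not grow
    infinitely loud as the listener approaches it.
    """
    return min_distance_m / max(distance_m, min_distance_m)

def gain_to_db(gain):
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)
```

At 2 m the gain is 0.5 (about -6 dB), and at 4 m it is 0.25 (about -12 dB). That is the familiar 6 dB drop per doubling of distance.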

If you want ambisonic file playback, it’s already integrated into Unity 2017 for AmbiX-formatted files. ONSP also includes a VST plugin, though it is for previewing audio only.
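To give a sense of what playing back an AmbiX file involves, here is a minimal Python sketch. It decodes one first-order frame to stereo using two virtual cardioid microphones pointed left and right. This is a simple textbook decode, not ONSP's binaural (HRTF-based) renderer, and the azimuth convention (positive to the left) is an assumption.

```python
import math

def virtual_cardioid(w, y, z, x, azimuth_deg):
    """Sample a horizontal virtual cardioid mic from one first-order AmbiX frame.

    Assumes ACN channel order (W, Y, Z, X) and SN3D normalization, so a plane
    wave of amplitude s arriving from the mic's look direction yields s.
    Z is unused because the mic points horizontally.
    """
    a = math.radians(azimuth_deg)
    return 0.5 * (w + x * math.cos(a) + y * math.sin(a))

def decode_to_stereo(frame, half_angle_deg=90.0):
    """Decode an AmbiX frame (W, Y, Z, X) to (left, right)."""
    w, y, z, x = frame
    left = virtual_cardioid(w, y, z, x, +half_angle_deg)
    right = virtual_cardioid(w, y, z, x, -half_angle_deg)
    return left, right
```

A source hard left, encoded as `(1.0, 1.0, 0.0, 0.0)`, decodes to full level in the left channel and silence in the right. A source straight ahead lands at half level in both.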

Bottom line: if you’re looking for basic spatialization, this will do a decent job. However, it lacks fairly standard middleware features such as occlusion and geometry-based room modelling. Oculus does offer a beta version of sound propagation that functions similarly to geometry-based modelling.
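Occlusion comes up in every one of these comparisons, so here is a rough 2D Python sketch of the idea. Cast a ray from the listener to the source and check whether any wall blocks it. Spreading several rays over a hypothetical `source_radius` gives a fractional, partial-occlusion value. This illustrates the concept only; it is not how ONSP, Steam Audio or Resonance implement occlusion. Real engines raycast against 3D scene geometry and then map the result to volume and filtering.

```python
import math

def segments_intersect(p1, p2, q1, q2):
    """True if segment p1-p2 properly crosses segment q1-q2 (2D)."""
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    d1, d2 = cross(q1, q2, p1), cross(q1, q2, p2)
    d3, d4 = cross(p1, p2, q1), cross(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0

def occlusion_fraction(listener, source, walls, source_radius=0.0, rays=16):
    """Fraction of listener-to-source rays blocked by walls (0 = clear, 1 = blocked).

    With source_radius == 0 a single ray is cast (simple on/off occlusion).
    A positive radius aims rays at points on a circle around the source,
    giving a rough partial-occlusion value.
    """
    if source_radius <= 0.0:
        targets = [source]
    else:
        targets = [
            (source[0] + source_radius * math.cos(2 * math.pi * i / rays),
             source[1] + source_radius * math.sin(2 * math.pi * i / rays))
            for i in range(rays)
        ]
    blocked = sum(
        any(segments_intersect(listener, t, w0, w1) for w0, w1 in walls)
        for t in targets
    )
    return blocked / len(targets)
```

Take a listener at the origin, a source at (10, 0), and a short wall from (5, -1) to (5, 1). A single ray reports full occlusion. With a 2 m source radius, only some rays are blocked.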

Download full SDK version here: https://developer.oculus.com/downloads/package/oculus-spatializer-unity/

Valve’s Steam Audio

This interactive musical garden demo built in Unity has generative music with spatialized audio handled by Steam Audio.

This is another spatializing tool that started out under a different name: Phonon. It was purchased by Valve and renamed Steam Audio. Unlike Facebook 360, its code is completely open-source, and it remains focused on spatialized sound for games and interactive media. Here are some quick facts:

- Works with all SteamVR-based headsets, including the Vive, Vive Pro, Vive Focus, Vive Cosmos and Valve Index

- Does not require Steam or a VR system to work

- Custom HRTF is available

- Supports listener-based occlusion and partial occlusion

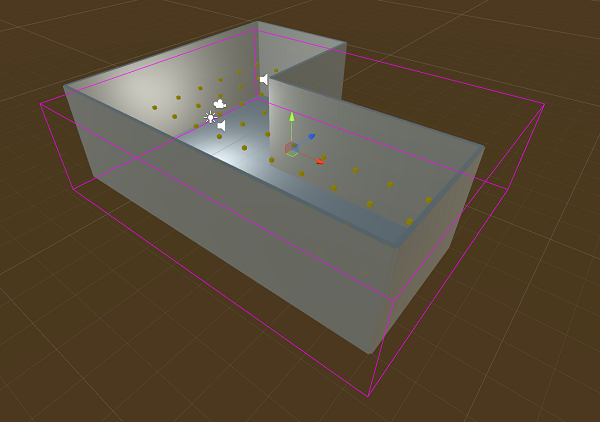

- Room modelling is entirely geometry-based

- Audio sources are spherical but can be modified with occlusion

- Offers “Baked In” reverb and reflections to save on CPU

- Supports ambisonic file playback for both head-locked and head-tracked sounds

- Not built into Unity natively; must be downloaded separately

- Supports FMOD Studio integration
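The difference between head-tracked and head-locked ambisonics comes down to one thing: whether the sound field is counter-rotated as the listener turns. Here is a minimal first-order Python sketch for yaw only. The AmbiX channel order (W, Y, Z, X) is standard, but the yaw sign convention is an assumption. A head-locked bed would simply skip this step.

```python
import math

def rotate_yaw_ambix(w, y, z, x, head_yaw_deg):
    """Counter-rotate a first-order AmbiX frame for a head turned by head_yaw_deg.

    Assumed convention: yaw is positive when the head turns left. Rotating the
    scene by -yaw keeps head-tracked sources fixed in the world. W (omni) and
    Z (up/down) are unaffected by a rotation about the vertical axis.
    """
    a = math.radians(-head_yaw_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return (w, y_rot, z, x_rot)
```

Consider a source straight ahead, `(1.0, 0.0, 0.0, 1.0)`. After the head turns 90 degrees to the left, the source ends up on the listener's right: Y becomes -1 and X becomes 0.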

An example of how Steam Audio uses Reverb Probes to set up realistic reflections in a physical space.

Steam Audio is focused entirely on game and interactive audio and does not feature a plugin or any kind of integration with a DAW.

Bottom Line: Steam Audio is a very robust and complete solution for spatialized audio in Unity, Unreal or other engines, with or without any headsets involved.

One of its primary innovations is “baking in” the reverb and reflection characteristics of a particular space ahead of time. This saves CPU power compared with calculating room reflections on the fly. It has also recently improved its ambisonic file playback support, making it a top-notch free tool for spatialized audio.
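The bake-once, look-up-at-runtime pattern behind this fits in a few lines of Python. Steam Audio's real bake simulates reflections with ray tracing and stores much richer data than a single number. In this sketch, Sabine's classic reverb-time formula, RT60 = 0.161 · V / A, stands in as the “expensive” offline computation, and the probe layout is hypothetical.

```python
import math

def sabine_rt60(volume_m3, surfaces):
    """Estimate RT60 in seconds with Sabine's formula RT60 = 0.161 * V / A.

    surfaces is a list of (area_m2, absorption_coefficient) pairs; A is the
    total absorption in metric sabins.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / absorption

def bake(probes):
    """Offline step: compute each probe's reverb time once, ahead of time.

    probes maps a name to (position, volume_m3, surfaces).
    """
    return {name: (pos, sabine_rt60(vol, surf))
            for name, (pos, vol, surf) in probes.items()}

def lookup(baked, listener_pos):
    """Runtime step: reuse the nearest probe's precomputed value."""
    name = min(baked, key=lambda n: math.dist(baked[n][0], listener_pos))
    return name, baked[name][1]
```
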

You can download it here: https://valvesoftware.github.io/steam-audio/

Google’s Resonance Audio

Resonance was formerly named Google VR Audio. Like Oculus’s ONSP, it is now included in a basic form in Unity 2018 and later. The full version requires a separate download to access all of its features.

The GAI VR game lesson Eagle’s Gift (soon to be released) features spatial audio using Google’s Resonance Audio. You can experience a preview version of this at our upcoming workshop!

Facts:

- Can be used with any Google VR system including Cardboard and Daydream

- Can be used without a VR device

- Supports directional audio sources (not merely spherical ones)

- Supports listener-based occlusion (though not partial occlusion)

- Room modelling improves on ONSP by offering material choices for each wall

- Provides geometry-based reflections and baked reverb

- Supports ambisonic file playback (AmbiX/SN3D)

- Works with FMOD and Wwise, with integrations available for both
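Directional sources are commonly modelled with a first-order pattern that blends an omnidirectional response with a figure-of-eight response. Resonance's Unity source component exposes directivity controls along these lines. Still, treat the exact formula and the `alpha`/`sharpness` parameter names in this Python sketch as assumptions.

```python
import math

def directivity_gain(angle_deg, alpha=0.5, sharpness=1.0):
    """Gain of a first-order directivity pattern, angle_deg off the source's axis.

    alpha blends omnidirectional (0.0) with figure-of-eight (1.0); 0.5 gives a
    cardioid. sharpness > 1 narrows the lobe by raising the magnitude to a power.
    """
    pattern = (1.0 - alpha) + alpha * math.cos(math.radians(angle_deg))
    return abs(pattern) ** sharpness
```

With the default cardioid, a listener directly in front hears full level. At 90 degrees off-axis the level is half, and directly behind it is silent.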

Bottom Line: Google Resonance is a strong competitor to Steam Audio, offering nearly identical features with slightly better performance in most areas. About its only drawback is the lack of support for partial occlusion, but Resonance makes up for that with a variety of other features. For instance, it supports ambisonic file playback, and it also lets you record your game’s spatialized audio as an ambisonic file. You can create an ambience with many spatially moving elements and save the output as a complex background ambisonic file for playback, saving CPU in the process. Pretty nifty!
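Recording spatialization to an ambisonic file essentially means encoding every source at its current direction and summing the results into one multichannel bed. Here is a first-order Python sketch of that encoding, assuming AmbiX (ACN channel order, SN3D normalization). Resonance's own recorder may use a higher order, and writing the frames out to a file is left out.

```python
import math

def encode_ambix_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order AmbiX (W, Y, Z, X), SN3D.

    Azimuth 0 is straight ahead and increases toward the left (assumed).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        sample,                                # W: omnidirectional
        sample * math.sin(az) * math.cos(el),  # Y: left/right
        sample * math.sin(el),                 # Z: up/down
        sample * math.cos(az) * math.cos(el),  # X: front/back
    )

def mix_bed(sources):
    """Sum (sample, azimuth_deg, elevation_deg) sources into one 4-channel frame."""
    bed = [0.0, 0.0, 0.0, 0.0]
    for sample, az, el in sources:
        for ch, value in enumerate(encode_ambix_first_order(sample, az, el)):
            bed[ch] += value
    return tuple(bed)
```

A full recorder would run this for every sample frame as the sources move and write the 4-channel stream to disk.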

Download Google Resonance Unity integration here: https://github.com/resonance-audio/resonance-audio-unity-sdk/releases

That covers the most popular platforms for working with immersive audio. There are other excellent tools out there, such as DearVR and Rapture3D, but most of these are commercially licensed products and are not as commonly used in the industry at this time.

Audio Design Considerations for Immersive Media

Okay, now that we’ve covered the basic tool sets you can check out for yourself, we’ll close with a bit of information on what it means to design sounds and music for this emerging medium. Here are a few tips to keep in mind if you want to get into the field.

The Only Limiting Factor is the End User’s System

Keep in mind that audio limitations such as voice count or available CPU resources come from the operating system and hardware you are designing sound for.

So, in the case of Microsoft’s HoloLens headsets, the bottlenecks are Microsoft’s .NET platform and the mobile processor the headset runs on. Similarly, anything from Google running on Android is subject to that system’s limitations, as well as those of the chips in the device. Because Android smartphones vary so widely, you may get different audio performance from different devices, even when they run the same operating system and the same app!
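One common way to stay within a device's voice budget is to rank the requested sounds and cull the least important ones. Here is a minimal Python sketch. The priority/audibility fields and the budget of 2 in the example are hypothetical, and Unity and audio middleware provide their own voice-limiting systems.

```python
from dataclasses import dataclass

@dataclass
class Voice:
    name: str
    priority: int       # hypothetical convention: higher number = more important
    audibility: float   # e.g. current gain after distance attenuation, 0.0-1.0

def select_voices(requested, max_voices):
    """Split requested voices into (kept, culled) under a fixed voice budget.

    Voices are ranked by priority first, then by how audible they currently are.
    """
    ranked = sorted(requested, key=lambda v: (v.priority, v.audibility), reverse=True)
    return ranked[:max_voices], ranked[max_voices:]
```

With a budget of 2, two priority-2 sounds beat a priority-1 sound, even if the lower-priority one is currently louder.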

Most standalone devices that are entering the market, such as Oculus’s Go and Quest and HTC’s Vive Focus, use Android-based operating systems as well. (One slight exception to this is Magic Leap’s Lumin OS which is a Linux-based system somewhat different from Android, though compatible in certain ways.)

Know Your Medium: Immersive vs. Assistive Audio

What does this mean for creative music and sound in a VR, AR or MR-oriented app or game? There is plenty of crossover and variability, but a general rule of thumb applies. The more entertainment-oriented the application, the more freedom and opportunity you have to create immersive soundscapes and compelling music soundtracks. Conversely, the more the application serves a specific function for a user in the real world, the less freedom you’re likely to have. There, the design needs to be unobtrusive and assistive in nature.

Thus, you will have the most room for creativity in VR apps and games. VR is the most immersive medium available and most often uses headphones, enabling a complete user experience with both spatialized (head-tracked) and non-spatialized (head-locked) elements. Look at games like the music-oriented Beat Saber or I Expect You To Die for examples of VR titles that make full use of the possibilities of immersive audio.

MR for entertainment would be the next most creative medium to get involved in. These applications may or may not use headphones, so it’s probably best to be prepared for both situations. See Weta Workshop’s “Dr Grordbort’s Invaders” for the Magic Leap One headset as an example of full-blown entertaining immersive sound development in MR.

In last place for creative flair, AR apps with a very functional purpose will tend to have the most limited sound design options and will probably feature little to no music. The sound design will have to be carefully managed. It may only be heard through a mobile device’s speaker, so deep, thrumming bass will not come through except on headphones.
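A quick way to audition how a sound will fare on a small speaker is to strip its low end with a high-pass filter. Here is a minimal first-order (RC-style, 6 dB per octave) sketch in Python. The 200 Hz cutoff in the example is a placeholder assumption, since real phone speakers vary by device.

```python
import math

def one_pole_highpass(samples, cutoff_hz, sample_rate_hz):
    """First-order high-pass: y[n] = a * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    a = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        y = a * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out
```

Feed it a constant (0 Hz) signal at 48 kHz with a 200 Hz cutoff, and the output decays toward zero within a few hundred samples. A signal alternating at the Nyquist frequency passes at nearly full level.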

Sound design in this context will likely go through several iterations to get just the right timbres that will get the user’s attention, yet not compete with outside sounds or sounds from other apps.

This is all part of an emerging field called “sonification”: using specially designed audio to give cues and help users navigate both interfaces and data. It’s been around for a while, but it has gotten a real boost as a discipline. More immersive hardware and headsets have arrived for enterprise markets, and the automotive industry has new needs as well. Audio design work in this sector will likely continue to grow for some time to come.
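A basic sonification technique is parameter mapping: turning a data value into a sound property such as pitch. The minimal Python sketch below maps a value onto a hypothetical two-octave range starting at middle C (MIDI notes 60 to 84). It then converts the note to Hz using standard A4 = 440 Hz tuning.

```python
def value_to_frequency(value, lo, hi, low_note=60, high_note=84):
    """Map a data value linearly onto a MIDI note range, then convert to Hz.

    Mapping in note (semitone) space rather than directly in Hz makes equal
    steps in the data sound like equal pitch steps. Values outside [lo, hi]
    are clamped to the ends of the range.
    """
    t = (min(max(value, lo), hi) - lo) / (hi - lo)
    note = low_note + t * (high_note - low_note)
    return 440.0 * 2.0 ** ((note - 69) / 12.0)
```

The bottom of the data range plays middle C (about 261.6 Hz), and the top plays C6 (about 1046.5 Hz).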

All right, we’ve come to the end of our journey into immersive sound. We’ll have more articles coming up, including one on middleware, which we promise we’ll get to soon!

In the meantime, maybe you want to learn more about all these technologies. Maybe you want to get your hands dirty designing audio and putting it into a game for immersive media, or working with a game engine and audio middleware in a fun, exciting and intense hands-on environment. If so, consider attending our 4 Day Game Audio Workshop Intensive at San Francisco State University.

We’ll spend one of those four days filling your heads with everything we can about audio for VR, AR/MR and 360 film/video. We’ll also work on a soon-to-be-released VR game project for Unity that involves spatialized audio.

That’s just one of the four days! On the rest we’ll be talking about the basic concepts to keep in mind when designing for interactive media, how game audio middleware works, and applying this into a game engine as well as learning the basics of how to get around in Unity 3D. It will be a jam-packed experience you don’t want to miss! We get much deeper into everything we covered in this series of articles but hurry, as seats are limited and fill up fast! Sign Up Today to reserve your seat!

We started the Game Audio Institute based on our experience in the industry and the classroom, where we have been developing curriculum and teaching both graduate and undergraduate courses. To really get involved with the game audio industry these days, it is essential that you understand what’s under the hood of a game engine, and be able to speak the language of game design and implementation. You don’t have to be a programmer (although that can’t hurt), but a general understanding of how games work is a must.

Unfortunately, all too often these days, people are missing out on developing a solid foundation in their understanding of interactive sound and music design. The GAI approach is fundamentally different. We want composers, sound designers, producers and audio professionals of all kinds to be familiar and comfortable with the very unique workflow associated with sound for games and interactive environments. We have many resources available for budding game audio adventurers to learn the craft, some of which we’ll be using in this series. To find out more about our Summer Hands-On Game Audio Intensive Workshop, Online Course, Unity Game Lessons or our book, just click over to our website gameaudioinstitute.com.

Steve Horowitz is a creator of odd but highly accessible sounds and a diverse and prolific musician. Perhaps best known as a composer and producer for his original soundtrack to the Academy Award-nominated film “Super Size Me”, Steve is also a noted expert in the field of sound for games. Since 1991, he has worked on hundreds of titles during his eighteen-year tenure as audio director for Nickelodeon Digital, where he has had the privilege of working on projects that garnered both Webby and Broadcast Design awards. Horowitz also has a Grammy Award in recognition of his engineering work on the multi-artist release, True Life Blues: The Songs of Bill Monroe [Sugar Hill] ‘Best Bluegrass Album’ (1996) and teaches at San Francisco State University.

Scott Looney is a passionate artist, soundsmith, educator, and curriculum developer who has been helping students understand the basic concepts and practices behind the creation of content for interactive media and games for over ten years. He pioneered interactive online audio courses for the Academy Of Art University, and has also taught at Ex’pression College, Cogswell College, Pyramind Training, San Francisco City College, and San Francisco State University. He has created compelling sounds for audiences, game developers and ad agencies alike across a broad spectrum of genres and styles, from contemporary music to experimental noise. In addition to his work in game audio and education, he is currently researching procedural and generative sound applications in games, and mastering the art of code.

Please note: When you buy products through links on this page, we may earn an affiliate commission.