Audio Mixing for VR: The Beginner’s Guide to Spatial Audio, 3D Sound and Ambisonics

Space: The New Frontier

When I was a young audio engineering student, one aspect of my studies that struck a chord with me was learning about the origins and evolution of the technology I often took for granted.

I was particularly drawn to the era when stereo recordings were first burgeoning in the hands of engineers and musicians, a time when there were no rules and no sense of tired practice. I sometimes envied those who were alive and working in that sonic wild west, only to find myself, along with every working engineer out there, facing a new audio paradigm today: the rapid expansion of 3D, or spatial, audio.

With the rise of virtual and augmented reality, 360 videos and every other immersive media format zipping its way down the pipeline, the need for realistic, immersive audio is bigger than ever. The concept of spatial audio might sound straightforward on the surface, but anyone with an audio background will naturally want to stop and think about what it actually means.

Then the web searches ensue, turning up a huge and often intimidating wealth of terminology, applied physics concepts, research papers, and software options. Fortunately for us, many people are hard at work making the jump into 360 audio production both understandable and doable in our existing production environments.

The opportunities for musicians, producers, sound designers, engineers and virtually anyone who works with sound are growing as fast as the immersive media market, yet it seems we have to filter through a mess of information just to get started. Does producing 360 audio content mean having to learn something completely from scratch? Is it a considerable investment? The goal of this tutorial is to unequivocally say “No!” to all of that and provide a practical pathway through this exciting new era of audio production.

Because there are so many directions one could go in when first getting into immersive sound, I’d like to focus on the post-production side of 3D audio rather than recording, as it uses tools we most likely already have. I’ll also be referring to the Pro Tools environment since it is so commonly used, though other DAWs like Reaper are quickly being adopted for 360 production. Lastly, this won’t cover game engines like Unity or Unreal, but be aware that these concepts very much apply to those environments too!

Breaking Your Stereo

The most common question I get about spatial audio from newcomers is “Oh yeah, isn’t that like surround?” And in some ways, it is. Spatial audio is all about the ability to “localize” a sound: with your eyes closed, you could picture certain sounds emanating from a particular direction and distance.

For years, mixing engineers have tried to create this depth of field in traditional stereo and surround recordings through a multitude of techniques. At its most basic, this can be achieved with channel amplitude panning. In 5.1 surround, the listener can localize sounds around the horizontal plane, both in front and behind. Though this gives a wider sense of location than stereo, it lacks the important vertical axis of height, and it depends on a great many variables on the end user’s side.
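Channel amplitude panning simply splits one signal between fixed speakers at different levels. Here is a minimal sketch of a constant-power (sine/cosine) pan law, assuming pan positions from -1.0 (hard left) to +1.0 (hard right); DAWs offer several pan laws, and this is just one common choice.

```python
import math

def constant_power_pan(pan):
    """Return (left_gain, right_gain) for a pan position.

    pan: -1.0 = hard left, 0.0 = centre, +1.0 = hard right.
    Sine/cosine law: left**2 + right**2 == 1 at every position,
    which works out to about -3 dB per channel at centre.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    theta = (pan + 1.0) * math.pi / 4.0  # map -1..+1 onto 0..pi/2
    return math.cos(theta), math.sin(theta)

left, right = constant_power_pan(0.0)
print(f"centre: {20 * math.log10(left):.2f} dB per channel")  # centre: -3.01 dB per channel
```

Notice that only two gains come out: all of the "position" lives in a level difference between two fixed speakers, which is why this approach has no way to describe height.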

Some emerging technologies incorporate 3D audio into sound bar designs, and 3D audio is technically possible over stereo loudspeakers through crosstalk cancellation. This applies more to home theaters than to interactive VR, though it is definitely exciting tech to keep our eyes on.

First of all, not everyone has a surround setup, and even with stereo, many people either have improperly configured speakers or never listen from the coveted “sweet spot.” More elaborate loudspeaker configurations are often proposed as the fix for these problems, but in the context of VR, the end user needs none of this.

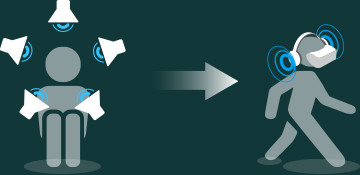

In the real world, we perceive sound “binaurally,” literally meaning with two ears. Real 3D audio is ultimately about giving our ears localization information covering all directions and distances through a single binaural playback device, otherwise known as a pair of headphones.

For VR, spatial audio also has to be interactive when viewing the final product: the perceived location of each source changes as the user moves their head (or drags the view). This is achieved by a rendering engine that takes the user’s head-position data into account while rendering the audio sources binaurally to headphones.

Not only does the use of headphones make more sense for VR applications, it also gives a great advantage to anyone who wants to make or listen to immersive audio experiences. Since practically everyone already has a pair, no specialized equipment is needed to dive in.

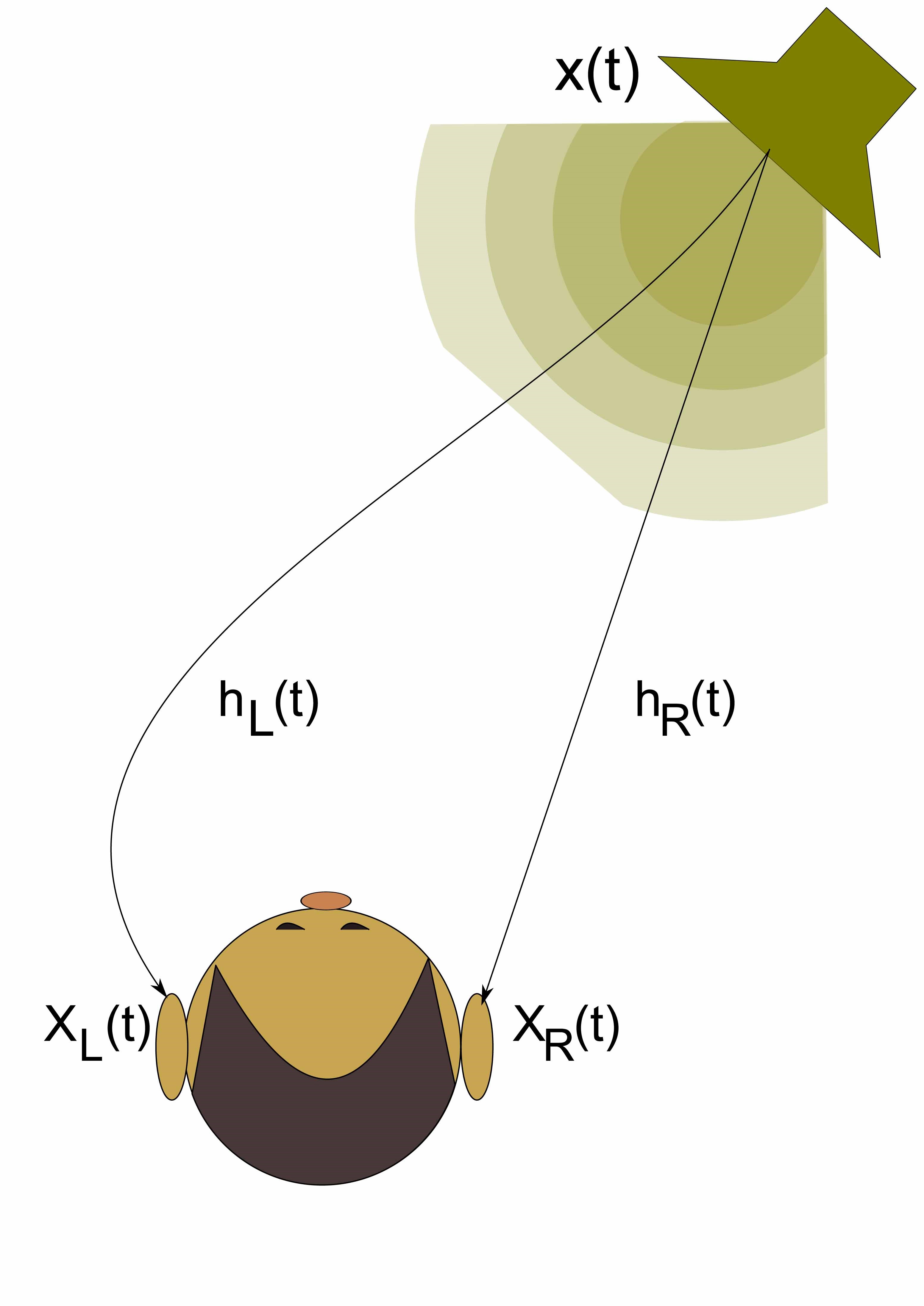

Using filters called “head-related transfer functions,” or HRTFs, the binaural renderer mimics how sound from a given point in space arrives at each of our two ears. HRTFs account for the physical shape and size of our head and ears, and for the resulting differences in timing, level and frequency content between the sound entering each ear canal. These are the same cues we use to localize sound in the real world.
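Real HRTFs are measured filters, far richer than any formula, but the timing cue alone can be approximated with a simple spherical-head model. The sketch below uses the classic Woodworth approximation, ITD = (a / c)(θ + sin θ), assuming an average head radius of 8.75 cm and a speed of sound of 343 m/s; it illustrates one localization cue and is not how any particular binaural renderer works.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly air at 20 °C (assumption)
HEAD_RADIUS = 0.0875    # m, a commonly used average head radius (assumption)

def woodworth_itd(azimuth_deg):
    """Approximate interaural time difference (seconds) for a distant source.

    azimuth_deg: angle from straight ahead towards one ear, 0 to 90 degrees.
    Spherical-head model: ITD = (a / c) * (theta + sin(theta)).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this simple form covers 0 to 90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:>2} deg: {woodworth_itd(az) * 1000:.2f} ms")
```

Even at its maximum (a source directly beside one ear) this difference is only about two thirds of a millisecond, which gives a sense of how finely the renderer has to control timing.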

A Picture of Objects in Motion

So if the only gear (outside of your computer and DAW) you need is a pair of headphones, how is this ultra-cool perceptual and interactive effect achieved in the production studio? Let’s say you have been given a video that was filmed with a 360 camera and are told by the client that they want to “spatialize” the audio. Let’s also say that you have access to all of the sound recordist’s audio, which includes lavalier microphones on the subjects as well as an Ambisonic microphone.

No doubt you either have heard or will soon hear a lot about Ambisonics as you immerse yourself in this world. Dating back to the early 1970s, Ambisonics is a speaker-independent, multichannel spatial audio format that added the missing “height” axis. The easiest way to think of Ambisonics is in terms of microphone polar patterns. For those familiar with mid-side microphone techniques, it is basically the same concept, with additional figure-8 components for front/back and height.
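Since the mid-side analogy carries a lot of weight here, this is a minimal M/S decode sketch, assuming the side mic's positive lobe faces left (swap the signs if yours faces right). In first-order Ambisonic terms, the omni W channel plays a role much like the mid mic and Y (left/right) like the side figure-8, with X and Z adding front/back and up/down figure-8s.

```python
def ms_decode(mid, side, width=1.0):
    """Decode mid/side sample sequences to (left, right) lists.

    Assumes the side (figure-8) mic's positive lobe faces left.
    width scales the side signal: 0.0 collapses to mono, 1.0 keeps the recorded width.
    """
    if len(mid) != len(side):
        raise ValueError("mid and side must have the same length")
    left = [m + width * s for m, s in zip(mid, side)]
    right = [m - width * s for m, s in zip(mid, side)]
    return left, right

left, right = ms_decode([1.0, 0.5], [0.5, -0.25])
print(left, right)  # [1.5, 0.25] [0.5, 0.75]
```

The width control is the same idea that makes Ambisonics so flexible: because direction lives in the relationship between patterns rather than in fixed speaker feeds, it can be reshaped after recording.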

With an Ambisonic recording, you can capture a spherical picture of the recorded environment, similar to having an ambient mic on steroids to blend in with your direct signals. Not only do you get a spherical image (referred to as a scene), but the recording can be decoded using the listener’s head-position data to provide interactive playback.

For “First Order Ambisonics,” the most basic Ambisonic configuration, four channels represent the sound sphere: W (omnidirectional), X (front/back), Y (left/right) and Z (up/down). These are not the kind of audio channels we’re familiar with, in that they don’t belong to a fixed speaker position. When decoded properly, these channels form a sound sphere in which the direction of each axis is user-controllable. This spatial audio format is known as Ambisonics B-format.
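To make the four channels concrete, here is a minimal sketch of encoding a mono source into first-order B-format. It assumes SN3D-style weighting (W at unity gain, as in the AmbiX convention), azimuth measured counterclockwise from the front (+90 = left) and elevation positive upward. Note that AmbiX files store the channels in the order W, Y, Z, X, and older FuMa-style B-format scales W by 1/√2, so check which convention your tools expect.

```python
import math

def encode_foa(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order B-format (SN3D-style gains).

    Assumed convention: azimuth 0 = front, +90 = left; elevation +90 = straight up.
    Returns a dict keyed by channel name (AmbiX files order these W, Y, Z, X).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return {
        "W": sample,                                # omni: same level from every direction
        "X": sample * math.cos(az) * math.cos(el),  # front/back figure-8
        "Y": sample * math.sin(az) * math.cos(el),  # left/right figure-8
        "Z": sample * math.sin(el),                 # up/down figure-8
    }

print(encode_foa(1.0, azimuth_deg=90.0, elevation_deg=0.0))
```

A source hard left (azimuth 90, elevation 0) lands in W and Y at full level, with X and Z at (numerically) zero. In a real session the same gains are applied to every sample of the source and updated as the object moves.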

So if you have this amazing 3D Ambisonic recording, why do you need the lavalier mics as well? Just as with a normal mix, the combination of direct or close microphones and distant ambient microphones can sum together beautifully to create a rich spectrum of frequency content.

In the case of spatialization, Ambisonic microphones do a lot, but not enough on their own to create detailed spatial information. Think of what happens when you move a sound source toward a microphone’s null point. The image becomes less clear, often with massive changes in frequency response (and not the kind of changes our ears hear naturally when a sound moves through a 3D field). This can affect how accurately we perceive the location, which is where the reinforcement of the direct signal microphones, or “objects,” comes into play.
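The null point itself is easy to see with the idealized first-order pattern equation, gain(θ) = a + (1 − a) cos θ, where a = 1 is omni, a = 0.5 cardioid and a = 0 figure-8. This minimal sketch models level only; real microphones also change tone off-axis, which is the frequency-response problem described above and is not captured here.

```python
import math

# First-order pattern weights: gain(angle) = a + (1 - a) * cos(angle)
PATTERN_WEIGHTS = {"omni": 1.0, "cardioid": 0.5, "figure8": 0.0}

def polar_gain(pattern, angle_deg):
    """Linear pickup gain of an idealized first-order mic at angle_deg off-axis.

    Negative values mean inverted polarity (e.g. the rear lobe of a figure-8).
    """
    a = PATTERN_WEIGHTS[pattern]
    return a + (1.0 - a) * math.cos(math.radians(angle_deg))

def to_db(gain, floor_db=-100.0):
    """Convert a linear gain to dB, clamping values at or near zero to floor_db."""
    magnitude = abs(gain)
    return floor_db if magnitude < 1e-9 else max(20.0 * math.log10(magnitude), floor_db)

for angle in (0, 90, 135, 160, 180):
    print(f"cardioid at {angle:>3} deg: {to_db(polar_gain('cardioid', angle)):7.1f} dB")
```

The level drops only 6 dB at 90 degrees but plunges toward silence over the last few tens of degrees, so small movements near the null produce large, unnatural changes.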

Any direct signal or mono recording that represents a visual component in a 360 video or other immersive media format can be placed in accordance with the action on screen. It doesn’t even have to be recorded on location; it could come from sound libraries or layered sounds, just as you would normally source the sound design for a film.

Object-based panning builds upon the idea of a surround panner, except that the object moves freely along all spatial axes, independent of any speakers. These sound sources are rendered binaurally in real time without blurring or degrading their positional clarity.
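What makes an object "object-based" is that it is described by where it is, not by which speaker it feeds. Here is a hypothetical sketch of such a description, assuming the same axis conventions as Ambisonics (x = front, y = left, z = up); `AudioObject` is an illustrative name, not part of Works or any SDK.

```python
import math
from dataclasses import dataclass

@dataclass
class AudioObject:
    """Hypothetical object description: a position only, no speaker assignment."""
    name: str
    azimuth_deg: float    # 0 = front, +90 = left (assumed convention)
    elevation_deg: float  # 0 = ear level, +90 = overhead
    distance_m: float

    def to_cartesian(self):
        """Return (x, y, z) in metres with x = front, y = left, z = up."""
        az = math.radians(self.azimuth_deg)
        el = math.radians(self.elevation_deg)
        x = self.distance_m * math.cos(el) * math.cos(az)
        y = self.distance_m * math.cos(el) * math.sin(az)
        z = self.distance_m * math.sin(el)
        return x, y, z

bird = AudioObject("bird", azimuth_deg=45.0, elevation_deg=30.0, distance_m=4.0)
print(bird.to_cartesian())
```

Because nothing in the description mentions speakers, the same object can be rendered to headphones, a loudspeaker array or an Ambisonic bed at playback time.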

The Tools

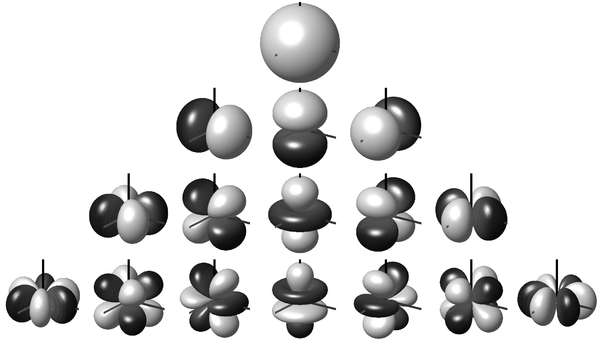

Luckily for us, putting all of this spatial audio theory into practice is very easy within our existing DAW production environments. As for software, G’Audio Lab has produced a free and very intuitive spatial plugin called “Works” that integrates seamlessly with 360 videos within Pro Tools.

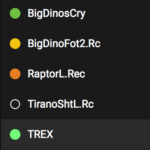

G’Audio Works lets you place objects and Ambisonic tracks directly on a QuickTime video to easily synchronize the locations of sounds.

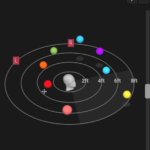

The audio tracks in your Pro Tools session will appear in Works with object-based controls such as azimuth (lateral position), elevation (height position) and distance. When you move the colorful dots on the screen, all of these positioning parameters are set in a single movement.
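How a given plugin maps its distance control to level isn't specified here, so as a point of reference, here is the textbook free-field inverse distance law, in which level drops about 6 dB per doubling of distance. A minimal sketch, assuming a 1 m reference distance and a hypothetical 10 cm minimum distance; real renderers may add other distance cues (such as high-frequency air absorption or the direct-to-reverberant ratio) that this ignores.

```python
import math

def inverse_distance_gain_db(distance_m, reference_m=1.0, min_distance_m=0.1):
    """Level change in dB relative to reference_m under the free-field 1/r law.

    min_distance_m is a hypothetical clamp so the gain doesn't blow up near zero.
    """
    d = max(distance_m, min_distance_m)
    return 20.0 * math.log10(reference_m / d)

for d in (1, 2, 4, 8):
    print(f"{d} m: {inverse_distance_gain_db(d):.2f} dB")
```

This also hints at why a distance control can feel more natural than a plain gain change: level is only one of the cues our ears associate with distance.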

Works uses an “edit as you watch” approach to spatialization, where all of your object panning can be done from a single plugin window. It has a simplified plugin structure, made up of slave plugins instantiated on any track you want spatial control over and a single master plugin where you can edit these tracks against a QuickTime movie. Using the Pro Tools video engine, you can load a mono or stereoscopic QuickTime movie and see exactly where your sounds should go.

The convenience here is twofold: the familiar environment of synchronizing your audio tracks with a video track is combined with the ability to place, automate and mix audio objects in real time. These objects appear as color-coded dots that can be clicked and dragged onto the corresponding characters directly on top of the QuickTime movie.

You can toggle between 2D view and HMD (head-mounted display) view for a first-person visual reference. Using the 2D view of a 360 video is like stretching a globe onto a flat map, giving you easy control over the direction of every object in a single plane.
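That globe-to-map stretch is an equirectangular projection: horizontal position maps linearly to azimuth and vertical position to elevation. A minimal sketch, assuming the centre of the frame is "straight ahead" and positive azimuth is to the left (common conventions, but check how your footage was stitched); the function names are hypothetical.

```python
def equirect_to_angles(px, py, width, height):
    """Map a pixel in an equirectangular frame to (azimuth, elevation) in degrees."""
    azimuth = (0.5 - px / width) * 360.0     # +180 at left edge, -180 at right edge
    elevation = (0.5 - py / height) * 180.0  # +90 at top row, -90 at bottom row
    return azimuth, elevation

def angles_to_equirect(azimuth, elevation, width, height):
    """Inverse mapping: (azimuth, elevation) in degrees back to pixel coordinates."""
    px = (0.5 - azimuth / 360.0) * width
    py = (0.5 - elevation / 180.0) * height
    return px, py

print(equirect_to_angles(960, 960, 3840, 1920))  # (90.0, 0.0): a quarter of the way across is hard left
```

Because the mapping is linear, dragging a dot horizontally changes only azimuth and dragging vertically changes only elevation. The trade-off is that the map stretches heavily near the top and bottom rows, just like a world map near the poles.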

Setup is quite easy too. Any mono, stereo, quad or surround audio track in Pro Tools can communicate with the master plugin once you load a slave plugin on it. Each track is immediately routed to the binaural engine, so all you have to do is click the objects in the master plugin to decide where they sit in the spatial field. If the sound sources move in your video, just make sure the plugin’s automation parameters are enabled and write/latch/touch automation for the objects directly on the QuickTime movie.

HMD view lets you monitor what the end user sees in a headset.

Individual lanes like azimuth (lateral position) and elevation (height position) are automated in one go. The 3D object map on the right side of the interface controls all of these parameters as well as distance, making automation passes very time-efficient.

Everything else about mixing for video in Pro Tools is as it has always been. Solid editing, compression and EQ still apply, as does any other DSP plugin at your disposal. The biggest shift in workflow concerns spatial positioning, which depends on the 360 video you’re working with. Because the end result is interactive, it is always a good idea to check how things sound by switching over to HMD view and moving the mouse around to listen. If an object is too loud, decrease the level of that channel with the gain slider in the master plugin (Pro Tools volume fader automation is not passed to the binaural renderer, so it is not included in the spatial audio file).

I get even better results with levels by adjusting the distance slider in the plugin, which gives a true-to-life perception of distance. As always, keep an eye on your master meter and leave ample headroom (at least 6 dB oughta do it, as many devices that play back spatial audio get crunchy the closer you get to 0 dBFS).
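To put numbers on that headroom advice: dBFS is 20 × log10 of the peak sample value relative to full scale (1.0 for floating-point audio), so -6 dBFS corresponds to a peak of about 0.5. A minimal sketch of a sample-peak check, assuming float samples between -1.0 and 1.0; it does not measure inter-sample (true) peaks, which a proper meter would.

```python
import math

def peak_dbfs(samples):
    """Sample-peak level in dBFS for float audio where full scale is 1.0."""
    peak = max((abs(s) for s in samples), default=0.0)
    return float("-inf") if peak == 0.0 else 20.0 * math.log10(peak)

def has_headroom(samples, required_db=6.0):
    """True if the sample peak sits at least required_db below full scale."""
    return peak_dbfs(samples) <= -required_db

mix = [0.12, -0.48, 0.35, -0.2]
print(f"peak {peak_dbfs(mix):.1f} dBFS, 6 dB headroom: {has_headroom(mix)}")
```

Keep in mind that the decibel scale is logarithmic: leaving 6 dB of headroom means keeping peaks at roughly half of full-scale amplitude.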

Another important feature is the ability to switch monitoring between G’Audio’s GA5 format and FOA (First Order Ambisonics), which is currently used by media sharing services like YouTube and Facebook. This lets you know how the mix will translate after you upload it (more on this later).

When you’re happy with the mix, simply create an offline bounce in Pro Tools. Depending on your needs, you either get a spatial audio file to encode with a QuickTime movie, or you can encode it directly from Works in Pro Tools (you choose between these in the export settings).

One thing to keep in mind is that the regular audio file you get from Pro Tools when you create a bounce should be discarded, as it only represents the binaural, head-locked version of your mix and is not the interactive spatial format needed for VR.

What about keeping things still?

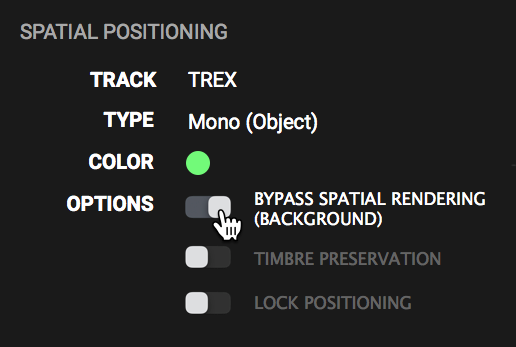

So let’s say that you’ve got your Ambisonic mic positioned just right and your individual objects moving in perfect sync with the action on screen, but the producer wants to add some background music. You load a slave plugin on a stereo music track, and it appears in the master plugin as it should. When you change the user’s head position, however, the music gets spatialized along with that motion and shifts around the listener. What if you don’t want this, and would rather keep the stereo music “head-locked” so that it stays put regardless of user interaction?

Select bypass spatial rendering for tracks that don’t need to move around the field, like music or VO.

In Works, it is as simple as switching on “bypass spatial rendering” for that particular track. This can be a very effective production technique for incorporating “non-diegetic” audio into your project, so that something like music or a voiceover is delivered directly to the listener. It is especially useful for narrative moments that don’t require visual attention to a particular subject, or for keeping user movements from distracting from dialog and music.
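Conceptually, bypassing spatial rendering splits the mix into a world-locked part that follows head tracking and a head-locked part that doesn't. A minimal sketch, assuming a first-order bed in W, X, Y, Z order with azimuth positive to the left, and a yaw-only (left/right) head turn; `render_frame` is hypothetical and not how Works or any player is actually implemented.

```python
import math

def rotate_foa_yaw(w, x, y, z, head_yaw_deg):
    """Counter-rotate a first-order scene to compensate for the listener's head yaw.

    head_yaw_deg: positive = head turned left (same convention as azimuth, assumed).
    W (omni) and Z (up/down) are unaffected by a pure yaw rotation.
    """
    psi = math.radians(head_yaw_deg)
    x_rot = x * math.cos(psi) + y * math.sin(psi)
    y_rot = -x * math.sin(psi) + y * math.cos(psi)
    return w, x_rot, y_rot, z

def render_frame(foa_bed, head_locked_stereo, head_yaw_deg):
    """Hypothetical renderer step: only the world-locked bed reacts to head movement."""
    rotated_bed = rotate_foa_yaw(*foa_bed, head_yaw_deg)
    return rotated_bed, head_locked_stereo  # head-locked stereo passes through untouched

bed, stereo = render_frame((1.0, 1.0, 0.0, 0.0), (0.2, -0.2), head_yaw_deg=90.0)
print(bed, stereo)
```

Turning the head 90 degrees to the left moves a source that was straight ahead to the listener's right (Y goes from 0 to -1), while the head-locked stereo pair comes back unchanged.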

Though this is really easy to control in the DAW environment, making it work when the media is played back leads us to the only difficult aspect of spatial audio: where is this media being played?

Okay, What Now?

So I promised that getting into spatial audio is easy… and it is! As you can see from this workflow, it is as fun as it is simple. The only head-scratching part of the process is the final destination for the media.

As spatial audio formats are in their own wild west phase right now, no standards have been established for how this media is shared. G’Audio responded to this problem by developing its own format called “GA5,” a very high-quality and flexible way to combine objects, Ambisonics and head-locked channel signals in one package.

Because of the differences in player platforms and codecs, there also needs to be a renderer that can play spatial audio regardless of these differences. SOL, the rendering engine that plays the GA5 format, can be implemented on the player side for the best spatial results. If you have a Gear VR, you can download G’Player, a free 360 media player with SOL integrated, from the Oculus Store.

Although their rendering technology is compatible across all platforms, it is still making its way to HMDs and media sharing platforms. The ability to upload spatial audio to Facebook or YouTube is also still in its early stages, with each platform imposing its own limitations and differing in what you can do.

Because Ambisonics B-format can be decoded spatially, it has been adopted as the current starting point for incorporating spatial audio in playable 360 media. The problem is that this format has inherent limitations. For one, a “First Order Ambisonic” spatial audio file carries only four channels to be decoded to binaural. Those four broad patterns (think polar patterns) can only describe direction coarsely, so the spatial effect of precisely positioned objects is greatly reduced.

You’ll definitely hear quite a bit about “Higher Order Ambisonics” (HOA) as a way to increase spatial resolution. In HOA, more spherical harmonic components are added with each successive order. Beyond a certain point this isn’t practical with existing technology, as much higher channel counts are needed for higher orders (nine channels for second order, sixteen for third order, and so on). While Pro Tools HD 12.8.3 supports up to 3OA (third order Ambisonics), no platforms can currently play back a mastered file encoded this way. Though Ambisonics is a great way to record 3D audio, hopefully it isn’t what we are limited to as an output format.
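The channel counts quoted above follow a simple rule for full-sphere (3D) Ambisonics: an order-N mix needs (N + 1)² channels. A minimal sketch of that arithmetic:

```python
def ambisonic_channel_count(order):
    """Channels needed for full-sphere Ambisonics of a given order: (order + 1) ** 2."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2

for order in range(1, 4):
    print(f"order {order}: {ambisonic_channel_count(order)} channels")
# order 1: 4 channels
# order 2: 9 channels
# order 3: 16 channels
```

The count grows quadratically, which is why each step up in spatial resolution costs noticeably more bandwidth and processing than the one before.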

This technology changes at a rapid pace, and we have to keep as up to date as we can on how to share this media. At the time of writing, the following list can serve as a reference for sharing your media across different platforms.

Handy Dandy List

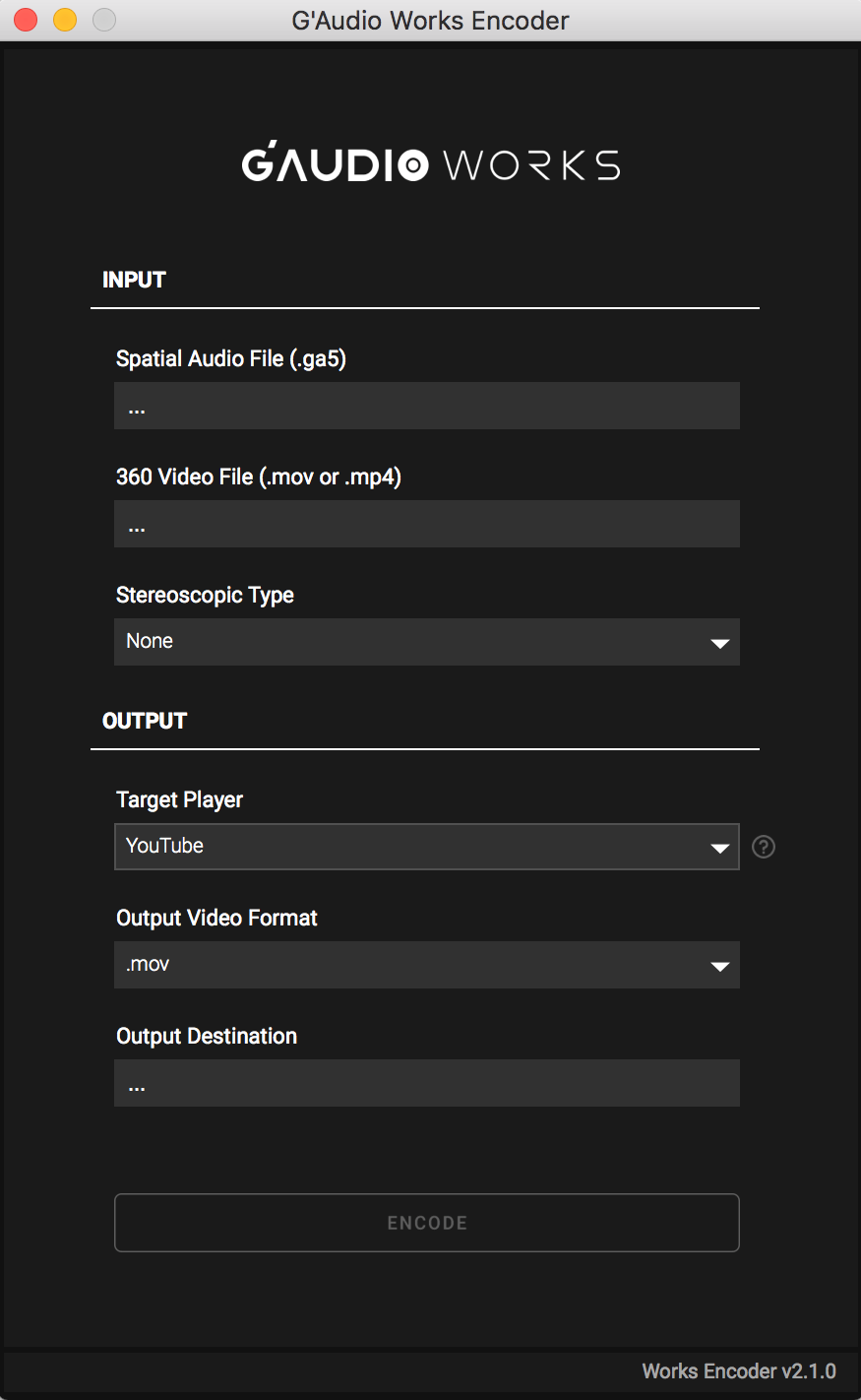

Encoders: These let you take different spatial audio formats and mux them with a QuickTime movie for various player destinations.

Works Plugin + G’Audio Works Encoder (G’Audio):

- Spatial audio input formats: GA5, FOA, head-locked stereo

- Output destinations: YouTube, Facebook, G’Player for Gear VR

FB360 Encoder (Facebook):

- Spatial audio input formats: FOA, 2OA, head-locked stereo

- Output destinations: YouTube, Facebook, Oculus Video

Spatial Media Metadata Injector (YouTube):

- Spatial audio input formats: FOA

- Output destinations: YouTube

Online Player Platforms: These are the most accessible services for uploading 360 videos with spatial audio playback. This is where you’ll find the most variation in spatial audio formats. Some are further along than others, though most are updating their platforms to accommodate spatial audio.

Facebook:

- Spatial audio format: supports 2nd order Ambisonics + separate head-locked stereo

- Create an Ambisonic spatial file, and any non-diegetic audio gets mixed in as a separate stereo wav file.

YouTube:

- Spatial audio format: supports First Order Ambisonics (no head-locked stereo)

- Because all four channels are used for FOA spatialization, YouTube currently does not provide the extra stereo channels needed for head-locked audio. There is, however, a workaround for head-locked mono sounds. In Works, any mono sound source placed at either +90 or -90 degrees of elevation will not react to lateral movement when using First Order Ambisonics. This keeps that sound source stationary when you move the mouse around in YouTube.

Vimeo:

- Spatial audio is in development but not available as of early 2018
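Why does the YouTube elevation workaround described above hold? At exactly ±90 degrees of elevation, a first-order encode puts the sound only in W and Z, and a pure yaw (left/right) rotation changes only X and Y. A minimal sketch, assuming SN3D-style first-order gains, W, X, Y, Z channel order, azimuth positive to the left and a yaw-only rotation; it illustrates the math and says nothing about how YouTube's own renderer is implemented.

```python
import math

def encode_foa(sample, azimuth_deg, elevation_deg):
    """First-order encode (SN3D-style gains), returned in W, X, Y, Z order."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (sample,                                # W: omni
            sample * math.cos(az) * math.cos(el),  # X: front/back
            sample * math.sin(az) * math.cos(el),  # Y: left/right
            sample * math.sin(el))                 # Z: up/down

def rotate_yaw(w, x, y, z, yaw_deg):
    """Yaw-only scene rotation: mixes X and Y, leaves W and Z untouched."""
    psi = math.radians(yaw_deg)
    return (w, x * math.cos(psi) + y * math.sin(psi),
            -x * math.sin(psi) + y * math.cos(psi), z)

overhead = encode_foa(1.0, azimuth_deg=30.0, elevation_deg=90.0)
for yaw in (0, 90, 180):
    print(yaw, [round(c, 6) for c in rotate_yaw(*overhead, yaw)])
```

The azimuth you set no longer matters once the source is straight overhead, because cos(90°) zeroes out X and Y. Tilting the view up or down (a pitch rotation) mixes X and Z, though, so the trick only keeps the sound fixed against left/right movement.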

— Glenn Forsythe is Account Manager and Sound Engineer for G’Audio Lab, a spatial audio company developing immersive and interactive audio software solutions.


PG

February 6, 2018 at 5:48 am

Very nice article!! Good job!

Dave Smith

February 6, 2018 at 9:22 am

I’ve been doing audio post in stereo and surround for over two decades. I’m just starting to dip into 360/VR mixing. I got a demo of G’Audio Works at AES and downloaded their free demo. Very cool. Unfortunately, it totally screwed up video playback in ProTools for my day to day post work. It took me over two months to finally figure out and track down the issue. Once I completely removed every bit of G’Audio software things went back to normal. Perhaps there was a compatibility conflict with the hardware, OSX or ProTools version. It would be great if G’Audio included an uninstaller!