Game Audio Primer: Immersive Sound for Virtual Reality, Augmented Reality and 360 Video

For an even deeper journey into the world of game audio, sign up for the Hands-On Game Audio Intensive Workshop, June 25-28 at San Francisco State University. This workshop is not to be missed by anyone interested in expanding the role of game audio in their career. Click here to register.

Greetings audio adventurers! In our last post, we went hands-on with techniques for implementing audio inside a game engine, using Unity3D. This time, we’re going to delve into the hot new world of immersive sound for VR, AR and 360 video.

Immersive audio is a vast field, and it’s probably the single fastest-growing sector in sound right now. It’s not just for video games, either. There are opportunities to design content for all kinds of interactive experiences, from arcades and theme parks to enterprise and education, and there are even applications in medical therapy! The future of immersive sound and music has indeed arrived.

But along with this new technology comes a host of unfamiliar terms. What do we mean by VR versus AR versus MR? Where and how can we apply ambisonic audio in a game? What’s HRTF? We will answer these questions and many more below. Let’s dive right in!

Let The Games Begin: Virtual Reality

Image courtesy of Stephan Schutze

“Virtual reality” is by far the most immersive facet of the new paradigm we’ll discuss today. In virtual reality, you are effectively isolated from the normal world around you and anyone in it. Even if you are playing a social VR game, you’re having your own unique experience, isolated from the outside world, every time you put on the headset.

The virtual area you’re transported to can be any size or shape, and you can be located anywhere in, on, or even under it.

360 Film & Video

One major branch of VR is 360 film and video, which effectively treats the VR headset as a huge movie screen where you can experience a curated and pre-recorded cinematic adventure.

If you’ve ever gone to an amusement park and sat in one of those half-dome theaters that play crazy rollercoaster films, intense flights over mountains, or hair-raising stunts of one kind or another, you’ve got the basis for this approach—except in this case, the audience is just you.

360 video is growing in popularity, and Facebook has obviously noticed: it purchased Oculus to promote these kinds of experiences.

Keep in mind that this medium is actually linear, and as such, working in it is more like designing sound for film or TV than for a game. In a 360 film or video, however, the viewer has more control than someone playing back a typical piece of linear media. They can turn around in a full circle (rotation) and tilt their head up and down (elevation), although some films and videos only cover 180 degrees horizontally.

Sounds that keep their position in the scene as the viewer rotates their head (or the camera) are called “head-tracked” sounds, while sounds that stay fixed relative to the listener’s head, such as narration or non-diegetic music, are called “head-locked” sounds. To capture this type of media, special cameras and special microphones are used.
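To make the distinction concrete, here is a minimal Python sketch of how a renderer might work out where a sound sits relative to the listener’s nose in each case. It only handles yaw (turning the head left or right), and the function names and the counterclockwise angle convention are assumptions for illustration, not any engine’s API.

```python
def wrap_degrees(angle: float) -> float:
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def relative_azimuth(source_azimuth: float, head_yaw: float, head_locked: bool) -> float:
    """Direction of a sound relative to the listener's nose, in degrees.

    Angles are counterclockwise (positive = to the left), an assumed convention.
    A head-tracked sound keeps its place in the scene, so head yaw is subtracted.
    A head-locked sound rides along with the head, so head yaw is ignored.
    """
    if head_locked:
        return wrap_degrees(source_azimuth)
    return wrap_degrees(source_azimuth - head_yaw)


# A sound placed 30 degrees to the left; the listener turns 30 degrees to the left.
print(relative_azimuth(30.0, 30.0, head_locked=False))  # 0.0 (now straight ahead)
print(relative_azimuth(30.0, 30.0, head_locked=True))   # 30.0 (still off to the left)
```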

Interactive VR

Another branch is interactive VR, which includes games, but can also include other interactive experiences and increasingly, even applications. The effect here is much more immersive, intimate and, well, interactive.

This part of the field is probably enjoying the most intense hardware development at the moment, with various manufacturers coming on board with VR systems at a wide variety of price points.

The new Oculus Quest is a standalone VR device with position as well as rotation tracking via the cameras in the headset, and also comes with controllers whose position and rotation can be detected.

In both 360 film/video and interactive VR, a headset is required for the experience, and specialized controllers are typically used to interact with objects or menus in the game or application. The experience is a fully immersive one, with sound delivered through headphones or speakers near the ears.

VR systems use either cameras built into the headset or external sensors to track the user’s head position and rotation. This is a far cry from the average casual game played on a smartphone, with a single tiny speaker held far from the player’s face. It quickly becomes obvious how many more opportunities there are for rich, immersive sound design.

(Beat Saber is an immensely popular music-oriented VR game, and a great example of how immersive media can be a real physical workout!)

Augmented and Mixed Reality (AR / MR)

Let’s move on to AR (Augmented Reality) and MR (Mixed Reality). These two fields are growing tremendously, especially in the enterprise sector. New devices are being released by companies like Microsoft, Lenovo, Magic Leap, and many others. Apps and games using this technology are now becoming extremely popular.

Both AR and MR can use standalone headsets, and these tend to get a lot of press, especially in the enterprise market. But at the consumer level, it’s more common to find these experiences on smartphones and tablets, which don’t require a headset. (They do require a built-in camera, however.)

On phones and tablets, controllers are usually not used. Instead, the user’s hands may be tracked, or the device may simply track objects it sees through the camera.

Differences Between AR and MR: It’s All In the Concept

Whether you call a particular application of the technology “AR” or “MR” mainly comes down to the design concept. Here are some unique characteristics of each.

Augmented Reality (AR)

The key to this concept is suggested by its name. You are basically using technology to put something new “on top of” the reality you see every day. You aren’t replacing it completely like you are in VR. Rather, you are using the technology to help or assist you in everyday tasks. So an AR application will overlay information or controls to enable users to accomplish tasks more efficiently.

A prominent example is the AR street-navigation directions recently showcased at Google’s I/O conference for the new Pixel series of Android phones.

Generally, the idea is for the added media to be non-intrusive and more functional than entertaining, per se—though the design could have more entertaining elements, especially if it is applied in a game format.

Mixed Reality (MR)

The MR approach is less strictly purpose-oriented and is more often applied to entertainment. The term has been popularized by Magic Leap to describe its technology, but other companies use it as well.

Generally, the goal is to mix fantasy elements and other computer-generated imagery into your current visual reality, and this mixing is made more convincing than in AR. Objects in mixed reality often cast shadows and appear to have physical mass, like objects in the real world. They can be rendered with realistic lighting and can even respond to occlusion, for example when the user places a hand over an object, or when the object moves behind an existing item in the real world.

Things Are Constantly In Motion

It must be noted that these terms we’ve described are extremely fluid, and the technology and companies involved are constantly evolving and reframing exactly what these words mean.

For example, Microsoft calls its system ‘Mixed Reality’, whether it involves its AR-based HoloLens or its licensed VR headsets by Acer and Lenovo—even though there’s a pretty big operational difference between the two.

Apple (with ARKit) and Google (with ARCore) both put “AR” right in the names of their kits, but a lot of demos seem more MR in nature, with monsters cavorting on your desktop in mock battles, and zombies chasing you down the street.

To make things even more confusing, there’s also “XR”, or Extended Reality, a term often used to cover all three technologies (AR, MR, and VR) together, but not 360 video.

Audio Development for Immersive Media: What You Need to Know

There has been interest in making recorded sound more immersive ever since audio recording was first developed. Early mono recordings began to be replaced by stereo in 1958. Quadraphonic sound was developed in 1970, and by 1979 the first surround-sound movie debuted using Dolby 5.1. This was followed by 7.1 in 1992, and today, Dolby’s Atmos format can integrate into systems with as few as 6 and as many as 64 discrete speaker feeds.

Games and interactive media lagged behind on this front, however, until personal computers became powerful enough to handle the necessary calculations. It wasn’t until 1999 that a 3D audio standard was developed, allowing distance attenuation and angle detection, though not elevation.

The Neumann KU 100 binaural dummy head, with microphones built into its ears, delivers extremely accurate binaural recordings.

Binaural Audio

One of the most interesting audio recording methods, and one many audio professionals may already be familiar with, is binaural audio. The basic theory is that stereo sound placement can be made more accurate for headphone listeners by re-creating the way humans hear, using a physical model of the human head with omnidirectional microphones placed in its ears.

Binaural recording started back in the 1970s and has proven to be quite enduring, and increasingly popular. With a binaural microphone, the way the head and outer ears block and reflect sound shapes the spectral response of the recording, giving the listener a much better sense of where sounds are placed.

Standard binaural recordings can go some of the way toward replicating the 3D feeling of a real sound source, and they handle sounds coming from different directions fairly well, but they do not create a full surround sound experience, especially in terms of elevation. This can be improved further with HRTF technology, which we’ll talk about in a moment.

Spatial Audio in VR, AR and MR for Games: The ‘Dirty Secret’

3D audio has been a part of most AAA video games since 1999, when the standard was adopted across the industry.

Game engines like Unity and Unreal, and middleware platforms like FMOD and Wwise, have long been able to create convincing sound sources that incorporate attenuation over physical distance, frontal angles up to 180 degrees or more, reasonable modelling of sound behind the player, and filtering of a sound when its source is partially or completely occluded by an object.
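The two oldest tricks in that list, distance attenuation and occlusion filtering, can be sketched in a few lines of Python. This is a generic illustration, assuming a simple inverse-distance curve and a one-pole low-pass filter; Unity, Unreal, FMOD and Wwise each expose their own rolloff curves and occlusion settings, and the function names and default distances here are hypothetical.

```python
import math


def distance_gain(distance: float, min_distance: float = 1.0, max_distance: float = 50.0) -> float:
    """Inverse-distance rolloff: full level inside min_distance, about -6 dB per
    doubling of distance beyond it, and no further drop past max_distance."""
    d = min(max(distance, min_distance), max_distance)
    return min_distance / d


def occlusion_lowpass(samples, cutoff_hz: float, sample_rate: float = 48000.0):
    """One-pole low-pass filter, a crude stand-in for the muffling of an occluded source."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)  # feedback coefficient
    out, y = [], 0.0
    for x in samples:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out


print(distance_gain(4.0))  # 0.25, i.e. about -12 dB at four times min_distance
```

In practice the occlusion cutoff would be lowered as more of an obstacle blocks the line between source and listener; how that amount is measured (raycasts, portals, and so on) is up to the engine.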

The main difference with VR, AR and 360 video is that you can track rotation of the head independently of the body. As far as game audio is concerned, this is more evolution than revolution.

Rotating the player’s head separately from the body doesn’t make a big difference to the audio techniques involved, so there isn’t a lot of innovation happening there. Rather, the innovation in immersive audio lies in applying these same features, long familiar to gamers, to new formats like AR, 360 video and beyond.

Where it starts to get fun in games is when we use newer, enhanced forms of binaural audio to get a much more accurate sense of placement.

HRTF Audio

Although binaural audio on its own is far from a completely accurate 3D spatialization solution, it is an important part of our next spatialization system for VR, AR and 360 video: the “Head Related Transfer Function”, or HRTF. HRTF is now the most capable (and most commonly available) spatialization system for these formats, and it can model how we hear sounds in a physical space much more accurately than binaural recording alone.

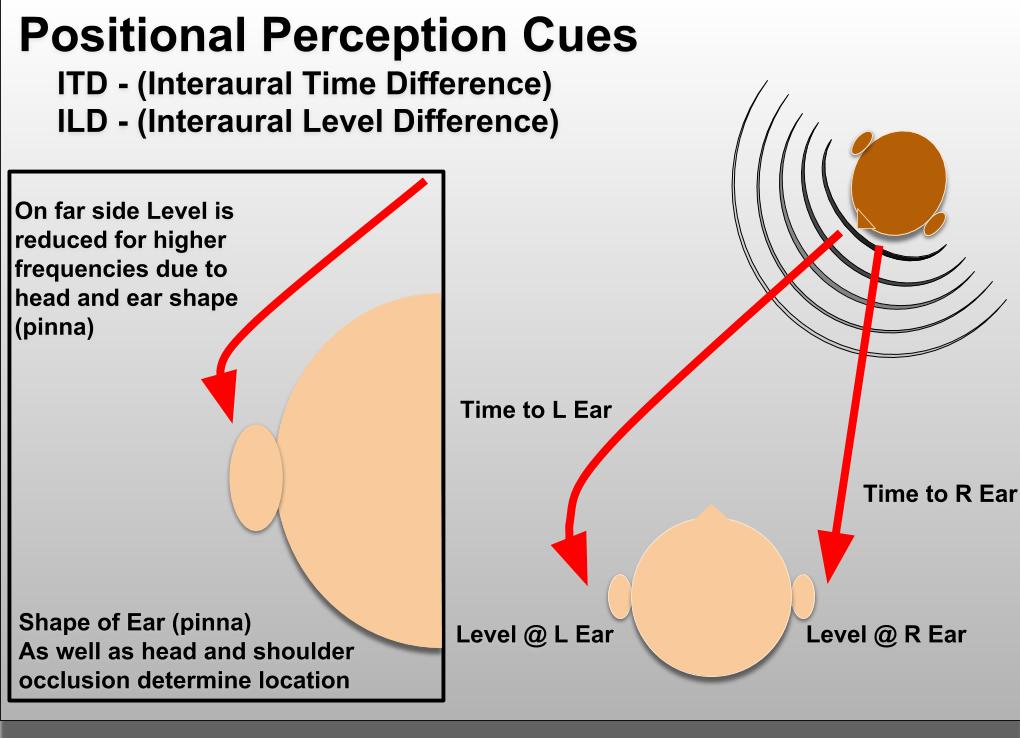

Humans, like many animals, have two ears, one on each side of the head, which lets us locate sounds in our environment accurately. Our brains can work out a sound’s direction in an instant, partly because a sound takes a different length of time to reach each ear. This is referred to as “Interaural Time Difference” or ITD.
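ITD can be estimated with Woodworth’s classic spherical-head approximation, ITD = (a/c)(θ + sin θ), where a is the head radius, c the speed of sound and θ the azimuth. The Python sketch below assumes an average head radius of 8.75 cm and a speed of sound of 343 m/s; real heads, and therefore real ITDs, vary from person to person.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C
HEAD_RADIUS = 0.0875    # m, a commonly used average (an assumption; real heads vary)


def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a distant source, using the
    spherical-head approximation ITD = (a / c) * (theta + sin(theta)).
    Intended for azimuths from -90 to +90 degrees (0 = straight ahead)."""
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


for az in (0, 30, 90):
    # roughly 0, 261 and 656 microseconds
    print(f"{az:>3} deg -> {woodworth_itd(az) * 1e6:.0f} microseconds")
```

For a sound directly to one side, this gives roughly 0.66 milliseconds, which is about the largest time difference a typical adult head produces.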

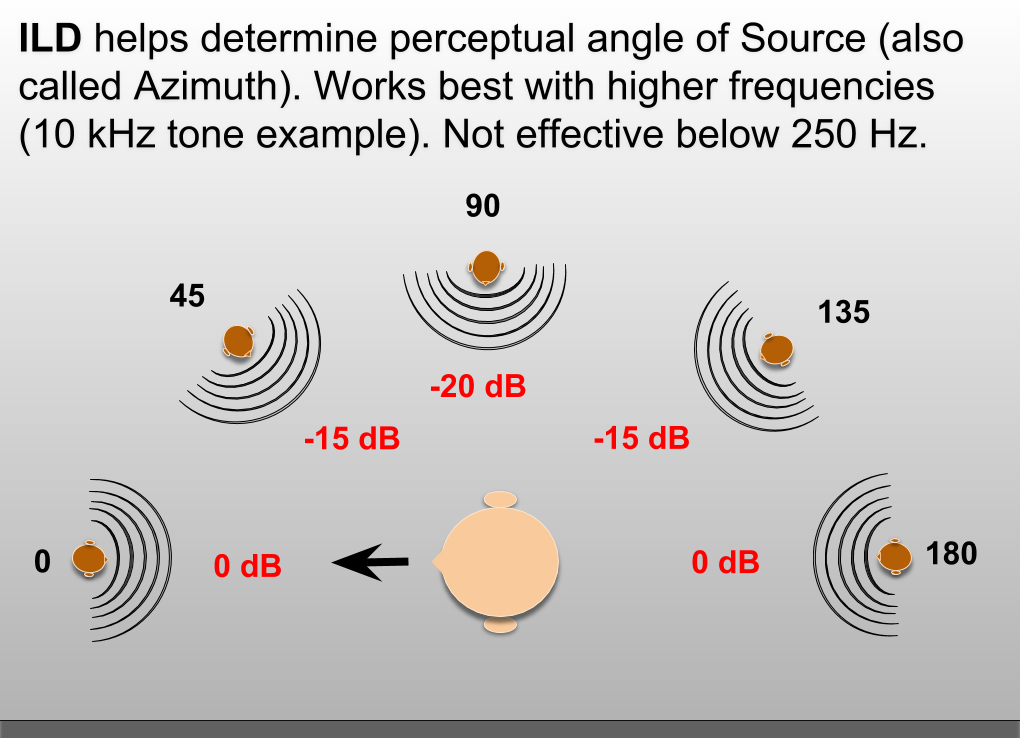

The perceived volume of a sound also differs between the two ears, because the head partly shadows the far ear; that’s called “Interaural Level Difference” or ILD. Together, these cues let the brain instantly determine the horizontal angle, or azimuth, of a sound.
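Together, ITD and ILD are enough for a crude left/right placement of a mono sound. The sketch below (plain Python, with a hypothetical function name) delays and attenuates the far ear’s copy of the signal. It assumes a single broadband ILD in decibels, which is a simplification because real ITD and ILD values change with frequency, and it cannot convey elevation or front/back.

```python
def apply_itd_ild(mono, itd_seconds, ild_db, sample_rate=48000):
    """Place a mono signal using only interaural time and level differences.

    Positive itd_seconds means the source is to the right (an assumed convention):
    the left (far) ear hears it later and quieter. Returns (left, right).
    """
    delay = round(abs(itd_seconds) * sample_rate)       # far-ear lag in whole samples
    far_gain = 10 ** (-abs(ild_db) / 20.0)              # dB attenuation -> linear amplitude
    near = list(mono) + [0.0] * delay                   # pad so both channels match in length
    far = [0.0] * delay + [s * far_gain for s in mono]  # delayed and attenuated copy
    return (far, near) if itd_seconds >= 0 else (near, far)


left, right = apply_itd_ild([1.0, 0.5], itd_seconds=0.0005, ild_db=6.0)
```

With a 0.5 ms ITD at 48 kHz, the left channel starts 24 samples late and at about half the amplitude of the right channel.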

Not only do ITD and ILD differ between the ears, but they also vary with frequency. For example, the head blocks high frequencies much more than low ones, so ILD is larger for high-pitched sounds.

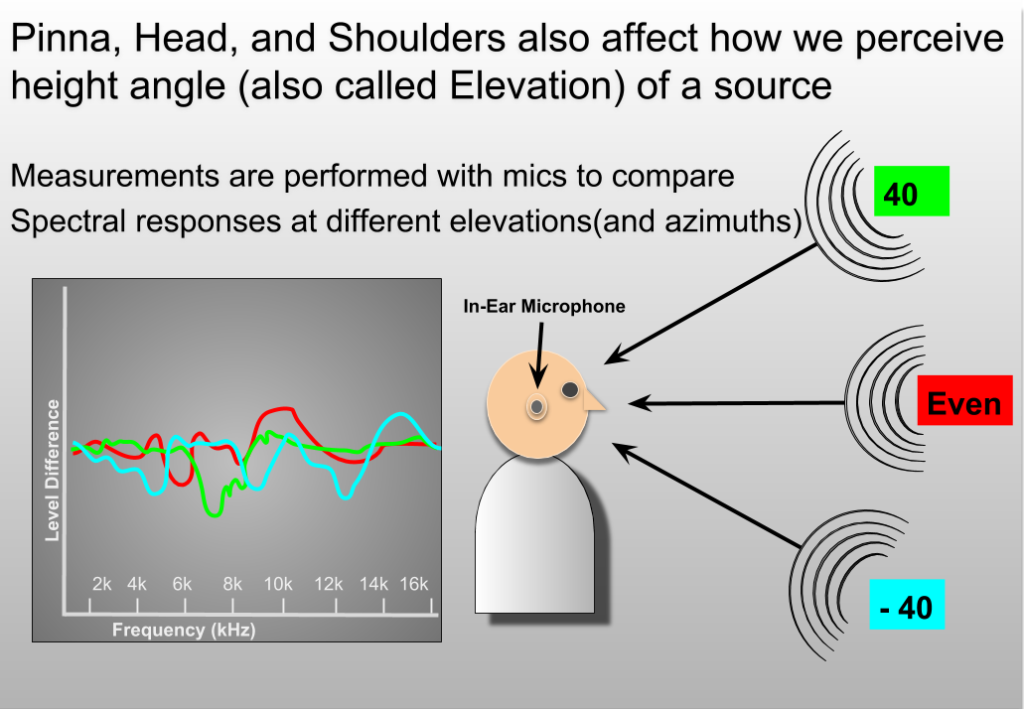

A tremendous amount of data has been recorded from test subjects wearing binaural microphones, capturing these values across the full range of frequencies. The subject’s head and ear shape, as well as sound reflecting off the shoulders, all contribute to a fairly accurate picture of the full frequency spectrum. And since HRTF includes accurate elevation perception, these measurements are made at different elevations as well as different horizontal angles.
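Measured HRTF data sets (often distributed in the AES69 “SOFA” file format) store an impulse-response pair for each measured direction, and a renderer needs to find the measurement closest to where a sound should be. The following Python sketch does a nearest-neighbour lookup by great-circle angle. The tiny data set and function names are made up for illustration, and real renderers usually interpolate between neighbouring measurements rather than snapping to one.

```python
import math


def angular_distance(az1, el1, az2, el2):
    """Great-circle angle in degrees between two directions given as azimuth/elevation."""
    a1, e1, a2, e2 = map(math.radians, (az1, el1, az2, el2))
    cos_angle = (math.sin(e1) * math.sin(e2)
                 + math.cos(e1) * math.cos(e2) * math.cos(a1 - a2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))  # clamp rounding error


def nearest_measurement(hrir_set, azimuth, elevation):
    """Return the (azimuth, elevation) key of the measured direction closest to the request."""
    return min(hrir_set, key=lambda k: angular_distance(azimuth, elevation, *k))


# Hypothetical, tiny data set: {(azimuth, elevation): (left_ir, right_ir)}.
hrir_set = {
    (0, 0): ([1.0, 0.2], [1.0, 0.2]),
    (90, 0): ([0.3, 0.1], [1.0, 0.4]),
    (0, 45): ([0.9, 0.3], [0.9, 0.3]),
}
print(nearest_measurement(hrir_set, 80, 5))  # (90, 0)
```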

This recorded data is compiled into HRIRs, or Head Related Impulse Responses: one for each ear at each measured direction. You may already be familiar with the term “impulse response”, which is used a lot nowadays, especially for reverb effects. HRIRs are applied using exactly the same process, called “convolution”, to modify a sound’s spectral quality so that it more convincingly seems to come from a specific location. HRTF can mimic horizontal angles and even elevation very convincingly, though depression (having a sound come from below you) is still not as accurate.
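Convolution itself is conceptually simple: every input sample triggers a scaled copy of the impulse response, and all the copies are summed. This plain-Python sketch renders a mono signal through a left/right HRIR pair; the short HRIR values and function names are invented for illustration. Direct convolution like this is far too slow for real-time use with long impulse responses, which is why audio engines typically use FFT-based (often partitioned) convolution instead.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution: each input sample adds a scaled copy of the IR."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def binauralize(mono, hrir_left, hrir_right):
    """Render a mono source as if it came from the direction the HRIR pair was measured at."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


left, right = binauralize([1.0, 0.0, 0.5], [0.0, 0.6, 0.2], [0.9, 0.3])
print(left)   # [0.0, 0.6, 0.2, 0.3, 0.1]
print(right)  # [0.9, 0.3, 0.45, 0.15]
```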

Ambisonic Audio

Another technology that’s gaining in popularity is ambisonic audio. This movement started back in the 1970s in Britain, with engineers who were dissatisfied with the performance of quadraphonic sound, especially since that format could not reproduce height. Ambisonic audio was first recorded with a special device from British maker Calrec, the Soundfield microphone, which featured four capsules arranged in a tetrahedral configuration.

This eventually developed into a way to encode sound from a 3D space into four channels and then decode them for a wide variety of playback systems and speaker layouts. Since then, higher channel counts have become available, improving the accuracy of spatial placement in the surround field.

There are two basic formats for Ambisonic audio, “A” and “B”.

A Format is the raw multichannel audio from each capsule on a microphone (or a number of microphones positioned over an area).

B Format is the encoded phase relationship of each of the channels from the A format audio, and it is highly dependent on the proper ordering of signals recorded in A format to accurately reproduce the spatial field.
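For a tetrahedral microphone like the Soundfield, the textbook A-to-B conversion is a simple matrix of sums and differences of the four capsule signals. The Python sketch below assumes the common capsule naming (left-front-up, right-front-down, left-back-down, right-back-up) and produces the W, X, Y and Z channels described below. It omits the overall scaling and the capsule-spacing correction filters that real converters apply, so treat it as an illustration of the idea rather than a production converter.

```python
def a_to_b(lfu, rfd, lbd, rbu):
    """Convert one sample from a tetrahedral mic's four capsules (A format)
    into first-order B format (W, X, Y, Z) with the basic sum/difference matrix.
    Capsules: left-front-up, right-front-down, left-back-down, right-back-up."""
    w = lfu + rfd + lbd + rbu  # omni: all capsules summed
    x = lfu + rfd - lbd - rbu  # front capsules minus back capsules
    y = lfu - rfd + lbd - rbu  # left capsules minus right capsules
    z = lfu - rfd - lbd + rbu  # up capsules minus down capsules
    return w, x, y, z


# A sound reaching only the left-front-up capsule shows up as front, left and up.
print(a_to_b(1.0, 0.0, 0.0, 0.0))  # (1.0, 1.0, 1.0, 1.0)
```

The ordering matters: swapping two capsule inputs flips the sign of some of the X, Y and Z channels, which mirrors the sound field. That is why B format depends so heavily on getting the A format channel order right.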

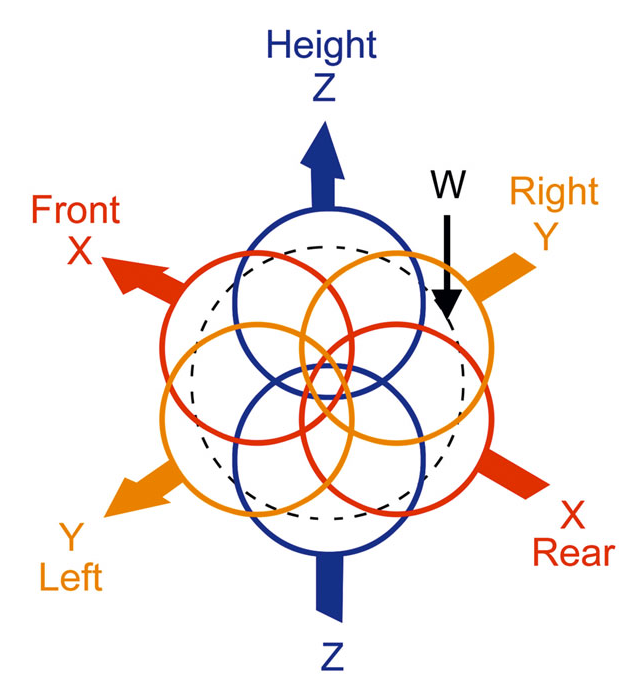

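In a B Format file, the four raw A format channels are combined into these four channels:

W – the omnidirectional component: the combined amplitude of all measuring points

X – the Front-Rear axis, a figure-8 component whose phase (polarity) tells front from rear

Y – the Left-Right axis, a figure-8 component whose phase tells left from right

Z – the Up-Down axis, a figure-8 component whose phase tells up from down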
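B Format can also be synthesized rather than recorded: a mono sound can be “placed” in any direction by weighting it into the four channels. This sketch assumes the traditional FuMa-style weighting, in which W is scaled by 1/√2; the newer AmbiX convention uses a different channel order and scaling, so check which one your tools expect. Angles are in degrees, with azimuth measured counterclockwise from straight ahead (so +90 is to the left).

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into traditional (FuMa-style) first-order B format."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)               # omni component, -3 dB by FuMa convention
    x = sample * math.cos(az) * math.cos(el)  # front-rear figure-8
    y = sample * math.sin(az) * math.cos(el)  # left-right figure-8
    z = sample * math.sin(el)                 # up-down figure-8
    return w, x, y, z


# A sound straight ahead: all of the directional energy lands in X.
print(encode_first_order(1.0, 0.0, 0.0))  # (0.7071067811865475, 1.0, 0.0, 0.0)
```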

This concept of phase values is quite complex, but it’s related to the same principles at work when you decode an M/S (Mid-Side) signal. In M/S, you have two channels of information (the forward-facing mid capsule and the figure-8 side capsule). You use an extra channel on your mixer carrying a polarity-reversed copy of the figure-8 signal to create a third channel from only two recorded channels, yielding a wider stereo image. Ambisonics takes this same concept much further.
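The M/S decode described above boils down to two sums: Left = Mid + Side and Right = Mid − Side, where the minus sign is the polarity-reversed copy of the side signal. The Python sketch below shows it on plain lists of samples; the function name and the `width` parameter (for scaling the side signal) are hypothetical conveniences.

```python
def ms_decode(mid, side, width=1.0):
    """Decode Mid/Side to Left/Right: L = M + S, R = M - S.
    width scales the side signal (0 = mono, 1 = as recorded, >1 = wider)."""
    left = [m + width * s for m, s in zip(mid, side)]
    right = [m - width * s for m, s in zip(mid, side)]  # polarity-flipped side
    return left, right


left, right = ms_decode([1.0, 0.5], [0.25, -0.5])
print(left, right)  # [1.25, 0.0] [0.75, 1.0]
```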

Ambisonic Order Value

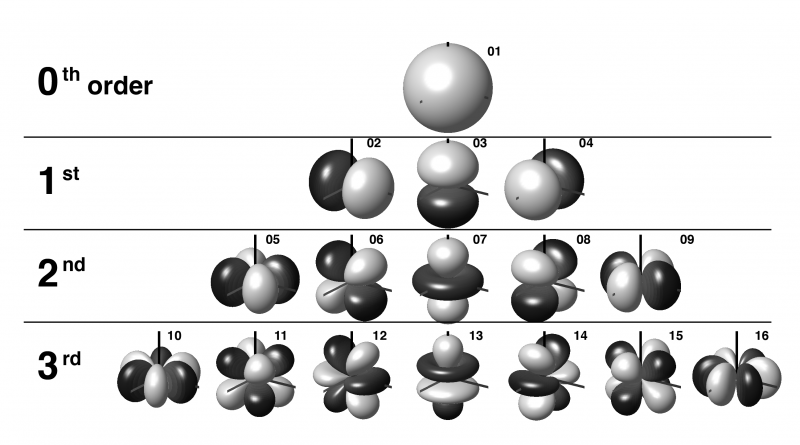

In this context, “order” refers to the number of channels, or virtual points, available in our B format file. Order effectively acts as a measure of resolution: higher orders mean more points and more precise spatial perception. The most common orders are 1st (with 4 points), 2nd (with 9 points), and 3rd (with 16 points). Higher orders also require more calculation to encode and decode the file accurately.
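The channel counts above follow a simple rule: full-sphere ambisonics of order N needs (N + 1)² channels, while horizontal-only ambisonics needs just 2N + 1. A quick Python check (the function name is illustrative):

```python
def ambisonic_channel_count(order: int, horizontal_only: bool = False) -> int:
    """Channels needed for a given ambisonic order.
    Full sphere (with height): (order + 1) ** 2. Horizontal only: 2 * order + 1."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return 2 * order + 1 if horizontal_only else (order + 1) ** 2


for n in (1, 2, 3):
    print(n, ambisonic_channel_count(n))  # 1 4, then 2 9, then 3 16
```

The quadratic growth is why higher orders cost noticeably more to encode, store and decode.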

Some Facts About Ambisonic Audio

- Ambisonic files are best used as immersive audio for 360 movies because they work best with 3 DOF (rotation only) situations.

- They can also be used as ambiences in interactive settings. Think of them as a new kind of surround ambience, except that the sound “stays in place” while your head and ears change their perspective on it.

- Generally, ambisonic files are more challenging to use for music, and it takes some work to maintain a good balance in all head positions.
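Keeping an ambisonic sound field “in place” as the head turns is cheap for first-order B format: a yaw (left/right) head turn only mixes the X and Y channels, while W and Z are untouched. The sketch below counter-rotates one B format sample by the head’s yaw angle. The counterclockwise sign convention and the function name are assumptions, and a full 3DOF renderer would also handle pitch and roll before decoding to headphones (usually via HRTFs) or speakers.

```python
import math


def compensate_head_yaw(w, x, y, z, head_yaw_deg):
    """Counter-rotate a first-order B format sample by the listener's head yaw,
    so the sound field stays fixed in the world while the head turns.
    Yaw is counterclockwise (positive = turning left), an assumed convention."""
    psi = math.radians(head_yaw_deg)
    x_rot = x * math.cos(psi) + y * math.sin(psi)
    y_rot = -x * math.sin(psi) + y * math.cos(psi)
    return w, x_rot, y_rot, z  # W and Z are unaffected by rotation about the vertical axis


# A sound straight ahead; the listener turns 90 degrees left, so it should end up on the right.
w, x, y, z = compensate_head_yaw(0.7, 1.0, 0.0, 0.2, 90.0)
```

After the call, x is essentially 0 and y is -1, meaning the sound now sits at the listener’s right.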

Now that we’ve covered a bit of the field and the various audio technologies involved in it, you’re probably wondering how you can get into doing this sort of immersive audio work yourself! Well, we have done A LOT of research on the matter. Be sure to tune in for our next article, where we will compare and contrast the feature sets of various immersive audio tools in both DAWs and game engines.

In the meantime, if this article has gotten your attention and you’d love a chance to learn the practice of actually putting sound into a VR-oriented game, consider attending our 4 Day Game Audio Workshop Intensive at San Francisco State University. We’ll be spending one of those four days covering everything we can fill your heads up with concerning audio for VR, AR, MR and 360 video, and we’ll be putting it into practice together on a real VR-based game project for Unity.

That’s just one of the four days. On the rest, we’ll be talking about key concepts and techniques for designing for interactive media, using audio middleware platforms, and applying it all in a working game engine as you learn the basics of getting around in Unity 3D.

It will be a jam-packed experience you don’t want to miss! We get much deeper into everything we covered in this series of articles, but hurry, as seats are limited and fill up fast! Sign Up Today to reserve your seat!

We started the Game Audio Institute based on our experience in the industry and the classroom, where we have been developing curriculum and teaching both graduate and undergraduate courses. To really get involved with the game audio industry these days, it is essential that you understand what’s under the hood of a game engine, and be able to speak the language of game design and implementation. You don’t have to be a programmer (although that can’t hurt), but a general understanding of how games work is a must.

Unfortunately, all too often these days, people are missing out on developing a solid foundation in their understanding of interactive sound and music design. The GAI approach is fundamentally different. We want composers, sound designers, producers and audio professionals of all kinds to be familiar and comfortable with the unique workflow associated with sound for games and interactive environments. We have many resources available for budding game audio adventurers to learn the craft, some of which we’ll be using in this series. To find out more about our Summer Hands-On Game Audio Intensive Workshop, Online Course, Unity Game Lessons or our book, just click over to our website gameaudioinstitute.com.

Steve Horowitz is a creator of odd but highly accessible sounds and a diverse and prolific musician. Perhaps best known as a composer and producer for his original soundtrack to the Academy Award-nominated film “Super Size Me”, Steve is also a noted expert in the field of sound for games. Since 1991, he has literally worked on hundreds of titles during his eighteen-year tenure as audio director for Nickelodeon Digital, where he has had the privilege of working on projects that garnered both Webby and Broadcast Design awards. Horowitz also has a Grammy Award in recognition of his engineering work on the multi-artist release, True Life Blues: The Songs of Bill Monroe [Sugar Hill] ‘Best Bluegrass Album’ (1996), and teaches at San Francisco State University.

Scott Looney is a passionate artist, soundsmith, educator, and curriculum developer who has been helping students understand the basic concepts and practices behind the creation of content for interactive media and games for over ten years. He pioneered interactive online audio courses for the Academy of Art University, and has also taught at Ex’pression College, Cogswell College, Pyramind Training, San Francisco City College, and San Francisco State University. He has created compelling sounds for audiences, game developers and ad agencies alike across a broad spectrum of genres and styles, from contemporary music to experimental noise. In addition to his work in game audio and education, he is currently researching procedural and generative sound applications in games, and mastering the art of code.


[…] Game Audio Primer: Immersive Sound for Virtual Reality, Augmented Reality and 360 Video June 7, 2019 by Scott Looney in News […]